How Do You Use a Neural Network in Your Business?—AI Show

November 20, 2018 in AI & Engineering

In this blog post

- Breaking down tasks

- Think: What's my data set?

- What is gradient descent?

- Designing a simple system

- The real world has kinks and curves

- Using an off-the-shelf system

- How do you define what your error is?

- How far away is the output from the model compared to what the actual truth is?

- Maintaining a deep neural network

- What's the key point?

Scott: Welcome to the AI Show. Today we're asking the question: How do you use a neural network in your business?

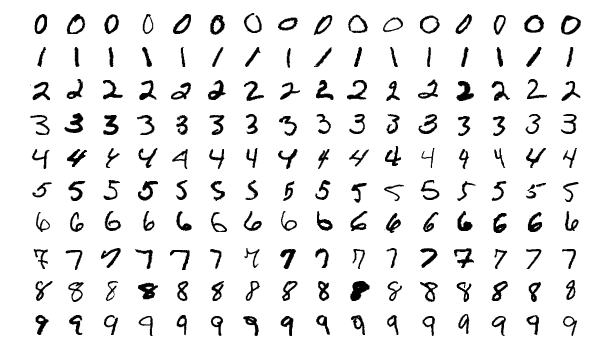

Susan: Well, let's just talk about what people think of neural networks, simple ones. There's sort of the classic one, the very first thing you ever build when you're learning how to deal with this stuff: the MNIST digit predictor. You're familiar with this?

Scott: Yes, MNIST.

Susan: It's like Modified National Institute of Standards and Technology.

Scott: Handwritten digits. 28 by 28 pixels. Gray scale.

Susan: Gray scale, basically they're handed to you on a silver platter and centered. Absolutely useless without a massive ecosystem around it to feed those digits into you in the perfect way.

Scott: What do you mean?

Susan: Well, you don't just take a picture of a letter and suddenly everything works.

Scott: Not that simple, right?

Susan: It's definitely not that simple.

How do you take something like a task of digit recognition?

How do you break it down?

How can you use deep learning to actually make an effective, useful model?

How do you create a tool that you can use in some meaningful way?

Breaking down tasks

Scott: In the real world, you have a task and you want to do something with a neural network. In this case it's like, I have a camera and I want to take a picture of something and I want it to figure out what is written on some letter. You have handwritten digits, just the digits - zero, one, two, three, four, five, six, seven, eight, nine - and tell me what the digits are. Simple task, right? A human can tell you right off the bat, they can just read them right off.

Susan: This is sort of the difference between accuracy in the machine learning world and utility. You can have the most accurate classifier in the world but it's completely useless because you can't feed it that data in the real world.

Scott: You want to send letters to the right place at the post office or something and you want it to be mechanized. But, people have handwritten everything, so hey, a hard problem, you used to do it with humans, now you want to do it with a machine.

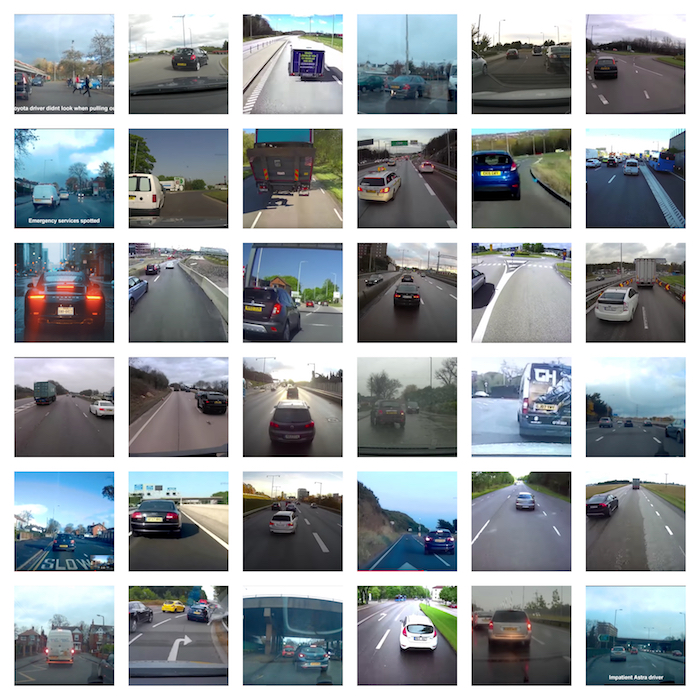

Susan: That's the core idea for today: how do we actually create a machine learning model or use neural networks in a real-world situation? We've got a great example there, digit recognition on a letter or something like that. However, in the news they're talking about license plate scanners. What would it take to actually build something like that? How do you actually take an idea and use deep learning in there? What are your big ideas of what you should be thinking about?

Think: What's my data set?

Scott: You have to think: "What's my data set?" That data set has to be at least kind of close to the task that you're trying to accomplish. Is it pictures of handwritten digits that are centered and perfect or is it pictures of license plates on cars that are driving down roads at oblique angles with lots of light on them or smoke? If you have those pictures fine, but do you have them labeled by a human and are they properly labeled? Are they centered or not, are they all blown out and all white, are they too dark, do they have a big glare in them, et cetera?

Susan: Just that first step we've talked about data so many times.

Scott: Very important.

Susan: It's important not only just to have data, but data that represents the production environment that you're going to be in. It's all well and good to have, say for instance, license plate data. But, if it's not taken in a meaningful way, if it's staged with professional cameras, is that going to be as good of a dataset as actually taking footage from the real world and from the equipment that I expect to use and dealing with it that way? Not the version that is pristine, but the version that's already gone through whatever codecs have had their hands on it.

Scott: The kind that has been compressed.

Susan: It's been compressed, it's been mangled. By the way, why is it whenever you see video like that it's just absolutely the worst?

Scott: It's always crappy, like it's been shot by a potato.

Susan: It's like Big Foot. Magically, whenever Big Foot shows up, it's on the worst video equipment ever, but you got to think you're about to take pictures of Big Foot and you need to recognize you're going to have that quality.

Scott: But, you also have to be careful. Don't try to boil the ocean. You don't have to get every single angle. Is it snowing, is it raining, is it whatever? Okay, get verticalized, get one thing working pretty well first and then you'll start to see the problems crop up. But, maybe 80% or 90% of your solution is already there and then you tackle those problems later. You don't have to do everything all at once.

Susan: Yeah. That's another key thing here: be prepared for iterations on everything.

Scott: Iterate.

Susan: Iterate.

Scott: ... iterate, iterate, iterate.

Susan: Get your first hour of data just so you understand formats and how you might be processing and dealing with it before you spend tens of thousands of hours and umpteen millions of dollars collecting data that you then find out is not quite right. That's really heartbreaking.

Scott: Yeah, you can't just guess the answer from the outset, it's too hard.

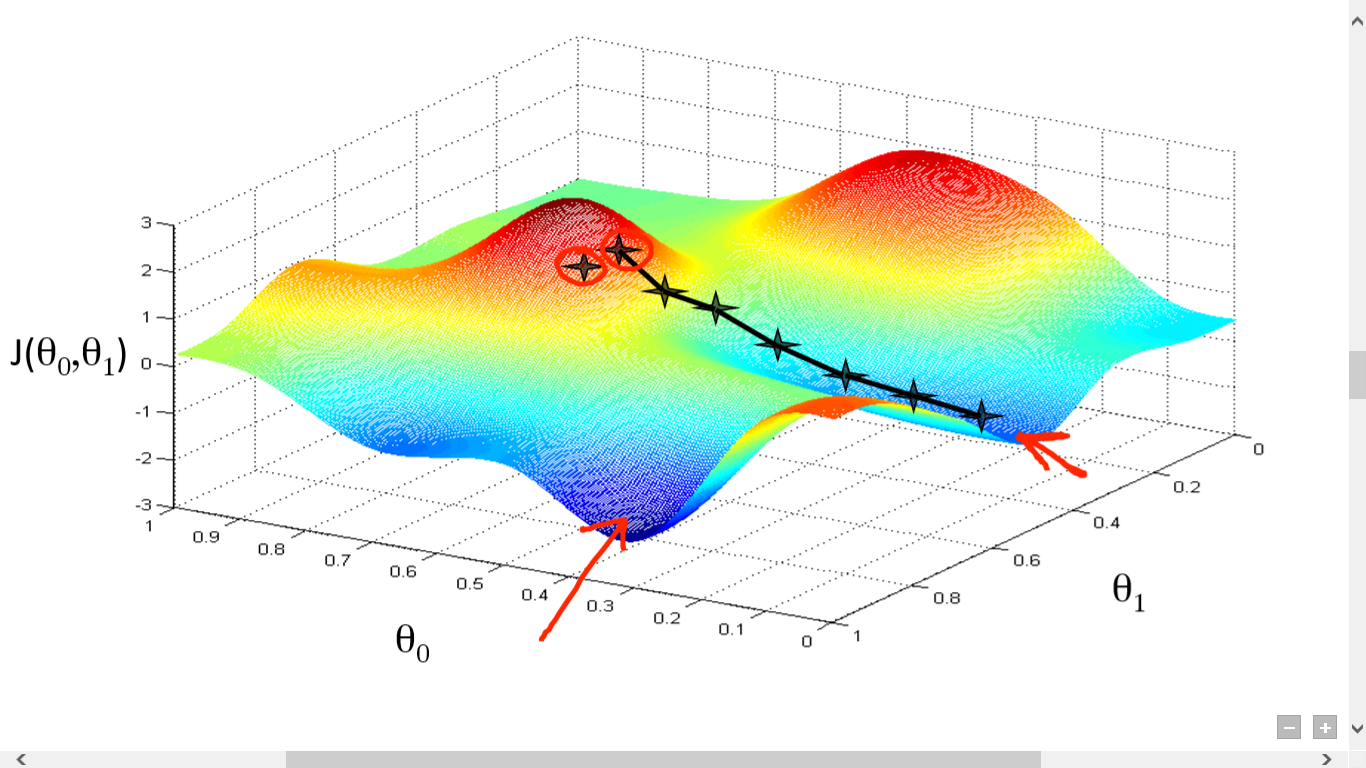

Susan: It's gradient descent, right? You assess, take a step, assess, take a step, assess, take a step, it's pretty classic.

What is gradient descent?

Scott: What do you mean by gradient descent?

Susan: It's the basic algorithm that a huge chunk of machine learning uses to train neural networks. Just like I said, I was giving the example there for assessing, taking a step, assessing, taking a step. The assessment stage is using what's called the gradient and that points in a direction that might be a good way to go for your weights.

Scott: As an example, if you're walking around in a hilly terrain and it's your job to find water you might want to start walking downhill. What do you do? You look around at your feet and you think, "Oh, well the hill is sloping that way, I should go that way." Does that necessarily mean that water's going to be that way? Not necessarily. If you keep going downhill, if there's water around, it's going to be around there. That gets into the discussion of maybe you're in a local optimum, meaning a local minimum in this case and you might need to go over the next ridge and then find an even lower hole somewhere. Still, this is gradient descent. You're looking at the slope and you're moving along that path.
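Scott's hillside can be sketched in a few lines. This is only an illustration, not anything from the show: the one-dimensional `height` function is a made-up terrain with two valleys, and `step` and `iterations` are arbitrary choices. Each walker looks at the slope under its feet and steps downhill; the one that starts on the right settles in the shallower valley, which is the local minimum Scott mentions.

```python
def height(x):
    # Hypothetical 1-D terrain with two valleys (an assumption for illustration).
    return x**4 - 3 * x**2 + x


def slope(x):
    # Derivative of height: which way, and how steeply, the ground tilts at x.
    return 4 * x**3 - 6 * x + 1


def descend(x, step=0.01, iterations=2000):
    # Assess the slope, take a small step downhill, repeat.
    for _ in range(iterations):
        x -= step * slope(x)
    return x


left = descend(-2.0)   # starts on the left hillside
right = descend(2.0)   # starts on the right hillside

print(f"left walker stops at x={left:.3f}, height={height(left):.3f}")
print(f"right walker stops at x={right:.3f}, height={height(right):.3f}")
```

Both walkers stop where the ground is flat, but only the left one found the deeper valley. Nothing in the rule "follow the slope" tells the right walker to climb over the ridge.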

Susan: Gradient descent then applies to machine learning, but it also applies to life. It's a great process/technique that really works well. We were talking about gathering data and the idea behind it is: don't jump in whole hog right away.

When you're designing a system that's going to be useful, you're really actually thinking: how am I going to use this thing? You've got to think about the data you're going to feed it and the data it needs to be fed in order to predict some answer that you can then use to do something with.

Just, very briefly, think about the MNIST style digit classifier there, data inputs are a 28 by 28 gray scale, centered.

Scott: The pixels are white and black, and gray.

Susan: How do you build that? You've got a whole ecosystem surrounding that, which has got to find where the digits are, parse them out, and do all these different things. When you're thinking about a production environment, the question is: I've got these cameras, so how am I going to get the data to the classifier itself, or to the network itself, in the shape and form that it was trained on, in order to make the prediction that I'm then going to use back out there? If you find that the task of doing that is a lot harder than the model itself, you're probably right. The real world is not well normalized.

Scott: If you get your data set right, you get your tools right, you pick your model architecture correctly, and you get your input and output set correctly, the training's actually pretty easy. You just say, "Go".

Susan: Encapsulate the problem, that's really what we're talking about here.

Scott: Define the problem well.

Susan: You need to define that problem. Going back to the iterative idea here, you'll find that you started collecting some data and then you started designing inputs, and outputs, and a model behind it and you realize maybe those inputs, and outputs, and that model can't work with that data so you need to adjust.

You go through this iterative system, but you always have to have an eye on the idea: "I can't do anything that the real world doesn't support."

That's what a lot of people lose sight of when they're learning how to use these tools the first time.

Scott: It has to work for real in a real setting.

Susan: Yeah, they're given these pristine data sets that have well encapsulated some simple problem, or even a complex problem. I've personally spent two weeks working on one dataset just to whip it into shape to be usable.

It's a really hard task to get the real world to be bent and shaped into something that's usable by your particular model. Keep that in mind when you're thinking about a usable model.

Designing a simple system

Scott: Let's go from beginning to end for a simple system. You have a dash cam in your car and you want it to detect license plate numbers and display them on a display in your car. So, you're like a police officer or something, right?

Susan: Officer Susan.

Scott: Officer Susan reporting for duty. You're driving around in your car, you have a dash cam and you want to get a text or display on your screen, using all the license plate numbers around you. How do you build that system? Okay, you have the camera, then what?

The camera, what is it doing? It's looking at an optical signal in the world. Through a lens it's taking in light and it's digitizing it, so that's really important. You have to be able to digitize the thing that you're actually trying to measure. It's pretty hard to measure people's thoughts.

Susan: Like you said, you've got to digitize that, you got to be able to put it in some sort of portable processing system if you're doing this real time.

Scott: So maybe it's hooked up to a USB. That dash cam is hooked up to a USB cable that goes to a computer and that computer is just saving an image, 30 of them every second, just saving all of them and just building up tons of data.

Susan: Relevant to the question about inputs and outputs, we'll just take a base one here. Are you going to try to figure out something over time or treat each image individually?

Scott: You have 100 pictures of the same license plate. Do you want 100 notifications of that license plate or just 1?
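One hedged way to picture Scott's question: treat the reader's output as a stream of per-frame readings and only notify when a plate has not been seen recently. The function name, the `cooldown_frames` value (90 frames is three seconds at the 30 images per second mentioned above), and the plate strings are all made up for illustration.

```python
def new_plate_notifications(readings, cooldown_frames=90):
    """Collapse repeated per-frame readings into one notification per sighting.

    readings: iterable of (frame_index, plate_text) in frame order.
    A plate triggers a notification only if it was not read within the
    last `cooldown_frames` frames.
    """
    last_seen = {}
    notifications = []
    for frame, plate in readings:
        previous = last_seen.get(plate)
        if previous is None or frame - previous > cooldown_frames:
            notifications.append((frame, plate))
        last_seen[plate] = frame
    return notifications


# 100 consecutive frames of the same (hypothetical) plate, then a new one.
readings = [(i, "7ABC123") for i in range(100)] + [(100, "4XYZ789")]
print(new_plate_notifications(readings))
```

The 100 readings of the first plate produce a single notification, and the second plate produces its own.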

Susan: The image classification world has gone light years, just massive leaps forward, since the original work on MNIST and what everybody's familiar with, making a simple multi-layer network to recognize digits. In general, you're going to have to find some way of taking that image that you've digitized and feeding it into some engineering solution that takes a picture every n seconds, or as fast as it can be processed, and then looks for the next one. It takes all that and feeds it into something that's probably going to normalize the image for light and apply some basic image processing techniques to take care of a whole lot of stuff.

Scott: Try to make it not too dark, not too light.

Susan: The more you can normalize your data, the less your neural network is going to have to work, which is a great thing because the accuracy is going to go up there.
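As a rough sketch of what "normalize the image for light" could mean, the function below stretches pixel values so the darkest pixel becomes 0.0 and the brightest becomes 1.0. This is one simple technique chosen for illustration, not necessarily what a production system would use, and the tiny 2 by 2 "images" are invented.

```python
def normalize_brightness(image):
    """Rescale a grayscale image (list of rows) to the range 0.0 to 1.0."""
    flat = [pixel for row in image for pixel in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A completely flat image carries no contrast to stretch.
        return [[0.0 for _ in row] for row in image]
    return [[(pixel - lo) / (hi - lo) for pixel in row] for row in image]


# The same pattern photographed too dark and too bright (made-up values).
too_dark = [[10, 20], [30, 40]]
too_bright = [[210, 220], [230, 240]]

print(normalize_brightness(too_dark))
print(normalize_brightness(too_bright))
```

After normalization the two inputs are identical, so the network downstream never has to learn that an underexposed plate and an overexposed plate are the same thing.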

Scott: Sure, but you have a camera and it's got a pretty big view, and the license plate could be anywhere inside.

Susan: You probably have to go into something that's going to detect where license plates are at.

Scott: You probably have two systems.

Susan: At least.

Scott: One that's a license plate detector. It just says, "I think a license plate is here" but that's looking at the entire image. It's looking for the whole thing and then saying, "Oh, I think a license plate is here". Then you have another one that says, "I'm going to snip out only that section and then I'm going to try and read the digits".

Susan: It's going to scale it next. It's going to snip out, scale it. You're going to make certain assumptions, because you know what license plates look like, about how to scale it. It's actually probably a nice problem because of that.

Scott: A fun problem.

Susan: Then finally, you can send it off to your classifier after you've scaled, and sliced, and diced. Now you've got something that might be able to output possible answers that you then display to the person driving. Hopefully they're not texting while they're doing it.
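The detect, snip, scale, classify flow described above can be sketched as plain functions. Everything here is a stand-in: `detect` and `classify` would be trained models in a real system, the 32 by 128 target size is an arbitrary assumption, and nearest-neighbor scaling is just the simplest resize to write down.

```python
def crop(image, box):
    # box is (top, left, bottom, right) in pixel coordinates.
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def resize_nearest(image, out_h, out_w):
    # Nearest-neighbor scaling to the fixed size the reader model expects.
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def read_plates(frame, detect, classify, size=(32, 128)):
    # Stage 1 finds plate boxes in the whole frame; stage 2 reads each snippet.
    results = []
    for box in detect(frame):
        patch = resize_nearest(crop(frame, box), *size)
        results.append(classify(patch))
    return results


# Stand-ins so the sketch runs end to end without any trained model.
frame = [[0] * 200 for _ in range(100)]            # blank 100 x 200 image
fake_detect = lambda image: [(10, 20, 30, 100)]    # pretend one plate was found
fake_classify = lambda patch: f"{len(patch)}x{len(patch[0])}"

print(read_plates(frame, fake_detect, fake_classify))
```

The fake classifier only reports the size of what it was handed, which is enough to confirm that every snippet arrives in the same shape regardless of where or how large the plate was in the frame.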

Scott: To build the data set for that, if you're starting out it's like, I want to build a license plate reader for that dash cam but I have no data. What do you start doing, strapping cameras to the front of cars and driving around, right? Then you send it off to crowd source the data labeling or you do it yourself and you sit down and look at images, and you draw a box around the license plates. There's the box around the license plates and you use those boxes, the pixel numbers for those boxes, to say in here, "There was a license plate." That's to get the data to build your first model. That just tells you where the license plate is. Once you've gone through and made all those boxes, now those are just images that are for your next data set. Then you go in and say, "Can I read these or not," or "Can a person read them or not?" Then, type in what that license plate is, the numbers or the letters. Now you actually have a labeled data set at that point and that's how you train the models that we're talking about. Identify where the license plate is, then also what is it, what are the numbers.
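The two labeling passes Scott describes map naturally onto one record per plate that feeds two training sets. The record layout, field names, file names, and plate strings below are assumptions made up for this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class PlateLabel:
    image_path: str
    box: Tuple[int, int, int, int]   # top, left, bottom, right, in pixels
    text: Optional[str] = None       # None when a person could not read it


def detector_examples(labels: List[PlateLabel]):
    # First data set: where is the plate? Every drawn box is usable.
    return [(label.image_path, label.box) for label in labels]


def reader_examples(labels: List[PlateLabel]):
    # Second data set: what does it say? Only readable plates are usable.
    return [
        (label.image_path, label.box, label.text)
        for label in labels
        if label.text is not None
    ]


labels = [
    PlateLabel("frame_0001.png", (40, 60, 70, 160), "7ABC123"),
    PlateLabel("frame_0002.png", (35, 80, 62, 170), None),  # too blurry to read
]
print(len(detector_examples(labels)), len(reader_examples(labels)))
```

An unreadable plate still teaches the detector where plates are, so it stays in the first data set and is dropped only from the second.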

Susan: Keep in mind, this is all a very simplified version of this problem.

Scott: We don't have to make it more complicated though. This is a simplified version and it's already really complicated.

The real world has kinks and curves

Susan: This is a real world use case. The real world is going to throw all sorts of kinks and curves at you. For instance, having multiple cars. You start detecting multiple license plates. What happens when a motorcycle splits lanes right next to you? What are you going to look at there? Those kinds of things: shadows hitting you, those people that put the shields over their license plates to make them hard to see, which, I don't know the legality of that.

Scott: A typical system would identify either where a license plate is or what the numbers are. That would be just a typical CNN, a convolutional neural network. These work really well, and those things have been done to death, with many academic papers written about them. You can figure out how deep should it be, how wide should it be, which kernels should I use, all these different settings. You just go download one built with something like PyTorch or TensorFlow, and there it is for you. Now, it might not be trained for exactly what your task is, but you don't have to pick the model architecture and you don't have to go through that whole design process to figure out what's going to work or not. You can pretty much take it off the shelf and hit train, and maybe adjust a few parameters. But, you spent an hour, five hours on that section, maybe a day, and then you spent two months on the other stuff.

Susan: That's a great point because there's a lot of off-the-shelf stuff that didn't exist before, especially in the image recognition world. If you're playing in that world, I don't get a good chance to go back there too often, but every time I look there's just more and more amazing tools, especially when it comes to anything on the road, for obvious reasons due to the autonomous driving revolution that's happening. Those tools are just getting a tremendous amount of attention and there's a lot of great work that's out there. If you're thinking about building some of these things, look for off-the-shelf solutions first.

Scott: There won't be an end-to-end solution for everything you need to do, but there will be parts of it where you can save a considerable amount of time.

Using an off-the-shelf system

Susan: If you go with some off-the-shelf system, that might dictate some hardware that you don't have access to. This model here is using these tools and these tools you either have to delve into them or figure out how to build something that can mimic them in some way, shape, or form. That becomes a real concern, especially for something in the real world where you don't have a lot of processing power available to do this task.

This comes back to the difference between accuracy and usability. If you have to have a rack of servers sitting in a car to be able to do the task, that's probably not usable, even if it's accurate.

Scott: Maybe a first proof of concept, but this isn't going to be a real product that you ship.

Susan: Driving around with all the fans whirring behind you.

Scott: With $100,000 worth of computers in the back of your car.

Susan: It's great, I can read those license plates now, although probably don't need that much compute for that task.

Scott: We talked about data, super important, we talked about inputs and outputs, loss function.

How do you define what your error is?

Susan: This is really determining more the type of problem you're doing. When we think about a loss function, what we're talking about is the thing that takes truth versus prediction and says how close are they.

Scott: What's my error? How do I define what my error is?
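To make "truth versus prediction" concrete, here is one common loss for classification, cross-entropy. The speakers do not name a specific loss, so treat this choice as an assumption; the three-class probabilities are invented.

```python
import math


def cross_entropy(probabilities, true_index):
    """Loss for one example: small when the model put high probability on the truth.

    probabilities: the model's predicted probability for each class.
    true_index: the position of the correct class.
    """
    # Clamp to avoid log(0) if the model assigned zero probability to the truth.
    p = max(probabilities[true_index], 1e-12)
    return -math.log(p)


truth = 1  # the correct class is the second one
confident_and_right = [0.05, 0.90, 0.05]
confident_and_wrong = [0.90, 0.05, 0.05]

print(cross_entropy(confident_and_right, truth))
print(cross_entropy(confident_and_wrong, truth))
```

The first number is small and the second is large, which is all a loss function has to do: turn "how far off was that guess?" into a single number that training can push down.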

Susan: That loss function has to be crafted in such a way that it can work with auto-differentiation, this ability to what we call backpropagate the error all the way through the model if you're talking about deep neural networks.

Scott: What does that do though, this backpropagation?

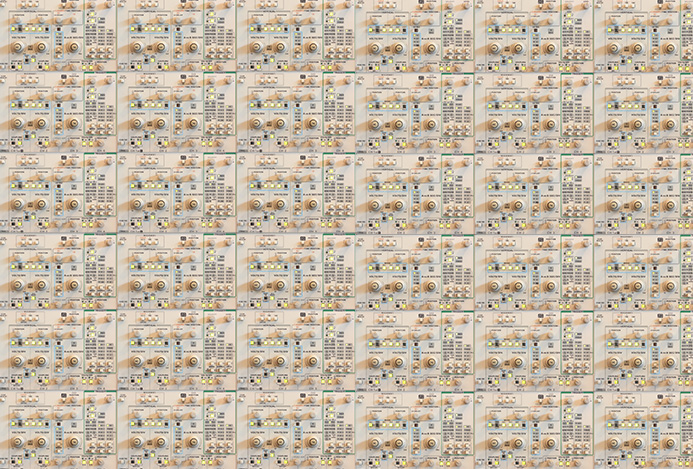

Susan: When we talk about a model and model structure, the structure is the physical way that the math is laid out. In other words, this equation leads to that equation, which leads to that equation. These are the layers. But, these layers, those equations, have a whole bunch of parameters. It's the simple slope formula y=mx+b.

Scott: Just numbers.

Susan: They're just numbers. If you can get those M and Bs just right then you can fit the curve.

Scott: And there's just millions of them though.

Susan: There's millions and millions and millions of them. Those things we call parameters.
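To see how quickly the m's and b's add up, the sketch below counts parameters in a plain fully connected network. The layer sizes are an assumption chosen for illustration: 784 inputs for a 28 by 28 image, a hypothetical hidden layer of 128 units, and 10 outputs for the ten digits.

```python
def dense_parameter_count(layer_sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # Each output unit has one weight (an "m") per input plus one bias (a "b").
        total += n_in * n_out + n_out
    return total


print(dense_parameter_count([1, 1]))          # a single y = mx + b
print(dense_parameter_count([784, 128, 10]))  # a small digit classifier
```

A single line has 2 parameters; this small digit network already has 101,770. Larger image models reach the millions Scott and Susan are talking about.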

Scott: So, all these dials in the network they need to be turned.

Susan: That's actually one of my favorite images is a person sitting in front of a switch board with 10,000 knobs between 0 and 11. Every single one of those knobs affects every other knob. You've got inputs over here and you've got outputs over there. If you could just twist those knobs right here to the right step-

Scott: There is a correct one that minimizes your error.

Susan: There is a great setting of them, but finding that out is hard. So what do you do? This is where backpropagation, gradient descent and all these things come into play. You send something through that model and you let it make a guess.

Scott: Leave the settings where they are and let it just go.

Susan: You look at the outputs that came in there, and you look at the truth, and you have your loss function.

Scott: You know the answer to the input that you gave it.

How far away is the output from the model compared to what the actual truth is?

Susan: You've got your loss function that's going to show you that. Now, from that loss function I can take that error and I can propagate it backwards through that network.

Scott: Essentially there's a recipe that says if we have this error down here then what you need to do is go back and turn all of these knobs this much, but it's only a little bit, each of them. It doesn't say, "Put this knob in this position." It says, "Move this one a little bit that way, move that one a little bit that way."

Susan: In every single example that goes through that it's going to say, "Hey, the knob should have been here" and "The knob's going to be there." When you've got a bunch of these examples, you take the average of a bunch of examples at once, this is what we call a batch, and now the average says, "In general, this knob should have gone over here." You do this a whole lot and eventually you get the settings for those knobs.
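The knob-turning loop can be shown on the smallest possible model, the y=mx+b line from earlier, with just two knobs. This is a sketch under assumptions: the data is generated from a made-up line (m=3, b=1), the loss is squared error, and `batch_size`, `learning_rate`, and `epochs` are arbitrary. With only two parameters the gradients are written out by hand; in a deep network backpropagation computes them for every knob.

```python
import random


def train_line(points, batch_size=4, learning_rate=0.05, epochs=500, seed=0):
    rng = random.Random(seed)
    points = list(points)
    m, b = 0.0, 0.0  # both knobs start at an arbitrary setting
    for _ in range(epochs):
        rng.shuffle(points)
        for start in range(0, len(points), batch_size):
            batch = points[start:start + batch_size]
            # Each example says which way each knob should move;
            # the batch average is the direction actually taken.
            grad_m = sum(2 * (m * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (m * x + b - y) for x, y in batch) / len(batch)
            # Nudge each knob a little, never jump straight to a position.
            m -= learning_rate * grad_m
            b -= learning_rate * grad_b
    return m, b


# Made-up training data that lies exactly on y = 3x + 1.
points = [(x / 10, 3 * (x / 10) + 1) for x in range(-10, 11)]
m, b = train_line(points)
print(f"m={m:.4f}, b={b:.4f}")
```

After a few thousand small nudges the two knobs settle very close to the values that generated the data.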

Scott: You don't do this once or 10 times, you do this millions of times.

Susan: In the end, it comes up with a great setting for those knobs and now the outputs are getting you pretty close to what you want.

Scott: At first there's a lot of movement, the knobs are moving all over the place and then there's slow refinement as the model starts to get trained.

Susan: There is the occasional time where it trips over and a whole bunch of them start going off into their local optimum.

Scott: Yeah because they all affect each one of them. It has to make up for that change. That's generally backpropagation.

One of the key skills is coming up with 1001 ways of thinking of that. The more ways you start thinking about how this works, the better you understand intuitively what's going on. That can help you design these things in the future.

Scott: Constraints help a lot with this:

How much money do you have?

What computing resources do you have?

What talent do you have?

You can go on many, many goose chases here, a lot of rabbit holes. You could spend the next 15 years working on a problem and never come up with something that's actually valuable. There's still many good things that you're learning along the way.

You have to learn to cut off certain things and be like, "Good enough, good enough, good enough". That's kind of the way that machine learning is now at least. You have to have some restraint in order to get a real product out the door.

Susan: We've talked a bit about designing something, but I think a lot of what people don't realize is that not only is building of them a challenge, but the world isn't static. Maintaining a deep neural network is actually a really big challenge.

Maintaining a deep neural network

Susan: Even just consider the license plate problem: every single year there's hundreds of new license plates. Someone writes to their state representatives and says, "Hey, I think the state should have this picture of my favorite cartoon character from 1983" and they get enough signatures and suddenly there's a brand new license plate in the world. Car designs change, vehicle designs change, all sorts of things change.

Scott: In California, the first digit kind of just incrementally goes up. There's a new first digit just because it's later on. It wasn't likely before, but now it's likely. The idea that you put all this time and effort and it stops, maybe there are problems out there like that, but it's pretty hard to imagine. We'll just go back to handwriting, the digit recognition. I can guarantee you that the average penmanship has changed considerably in the last 15-20 years. So, if you think that handwriting is stagnant, you're not banking on a good bet.

You need to have some way of keeping your model, keeping your environment up-to-date, and swapping it out, and keeping it trained, and topped off.

Keep the cobwebs off your model, keep your environment up-to-date.

Scott: Also presumably, you've got a model in production and now what do you do with your time? You try to make it better. Hey, I have some ideas, maybe I could do this or maybe I could do that and that will make it better. Great, but the other stuff is still operating over there. What if you make a small improvement? Okay, now there's this version change, history, and now you get into version control of your models which is not really a thing yet. People don't quite think in that way, but they certainly will have to think in that way in the future.

Susan: Well that's a really huge challenge. There's some decent articles and blog posts on this fairly recently, talking about version control and the machine learning world. That's just a big challenge. A lot of times you're talking about not just a few bytes of data here but 100s of megs, which doesn't sound like a lot but you version that 15 times a day and suddenly you're talking real data.

Scott: A gig or so. You're filling up your hard drive pretty fast.

Susan: The reproducibility of these things is a slight challenge because you can take the same exact model, structure, the same training data, the exact same training pipeline, and most of them incorporate randomness into them for very good reasons, so you'll get a different result if you train a model twice with what you thought was all the same stuff. What does it really mean to version control something is a big challenge.
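A small sketch of the reproducibility point, with loud caveats: `train_stub` is a stand-in for a real training run and simply draws seeded random numbers as its "weights", and `fingerprint` is one hypothetical way to tell two sets of weights apart. Real pipelines have more sources of randomness than a single seed.

```python
import hashlib
import random
import struct


def train_stub(seed):
    # Stand-in for training: the result depends on the random seed,
    # just as initialization and data shuffling do in a real run.
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(1000)]


def fingerprint(weights):
    # Hash the raw bytes of the weights to get a short version identifier.
    packed = struct.pack(f"{len(weights)}d", *weights)
    return hashlib.sha256(packed).hexdigest()[:12]


print(fingerprint(train_stub(seed=7)))
print(fingerprint(train_stub(seed=7)))  # same seed, same fingerprint
print(fingerprint(train_stub(seed=8)))  # different seed, different model
```

Recording the seed alongside the code and data is one piece of what "version controlling a model" might need to capture, and a content hash at least makes it obvious when two runs did not produce the same thing.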

Scott: The question will have to be answered in the next few years probably.

Susan: Yeah, we're seeing the evolution of the industry.

Scott: This is a long timescale thing. Hey, we're at the beginning. It's like electricity in the 1900s or something, that's AI now.

What's the key point?

Susan: The key point is you got to take real world data somehow. Don't get stuck in some training world.

Scott: You got to use real world data.

Susan: Real world data. If you can't take real world data, you don't have a useful model, useful structure. How about yourself?

Scott: Scott's key point is: try to get water through the pipes. Just get something working, anything working, and then you can iterate.

Susan: Iterate.

Scott: Iterate.

Susan: Iterate.

Scott: Then you can iterate. Hey, maybe it doesn't work very well at first, that happens for sure, but then you tweak some things, now it starts to work again. Is it all worth it to go through that?

Susan: Depends on the problem, depends on the money.

Scott: Some problems are too hard, just don't do it right now. Some problems are really easily solved with something you don't need to use a neural network for. But there's a region in between where it's like, yep, this makes a lot of sense.

Susan: If you can get 95% of the way with a simple tool, maybe you should be doing that first.

Scott: Might keep doing that, unless it's something you make billions of dollars from. Then, hey, maybe we can do something with neural networks.

If you have any feedback about this post, or anything else around Deepgram, we'd love to hear from you. Please let us know in our GitHub discussions.
