What Does it Mean for a Machine to Learn? — AI Show

November 2, 2018 in AI & Engineering

Scott: Welcome to the AI Show, I'm Scott Stephenson, co-founder of Deepgram. With me is Jeff Ward, aka Susan. On the AI show, we talk about all things AI. Today we're asking the very big question, "What does it mean for a machine to learn?"

Susan: What do you think it means for a machine to learn, Scott?

Scott: I think a basic way to look at it is by asking the question:

"Is a machine altered after you give it some data. Or, you expose it to an environment. Has it changed and changed for the better?"

Of course, you could take a car wash and then drive a semi truck through it, and then it just destroys the car wash, but that probably isn't better.

Susan: Well, I don't know, it's open up to the sky, the rain can come down. You've got larger volume then, right?

Scott: Yeah. But if you wanted to teach a child to read, maybe you show them, "Hey, this word means this, these sounds mean that, etc." And then they try it, and then you say, "Yeah good job," or not. Then the next time they try to read, do they get better? Do they remember some things, do they do things like that?

Scott: These are humans learning, or animals learning, doing a similar thing. It's a similar thing with a machine. Doesn't matter what the stimulus is:

Do you give it an image?

Do you give it some audio?

Do you give it some text?

Do you give it some clicking on a spot on a website when you're about to buy some shoes?

It learns from that and it gets better at doing whatever its job is. That's really machine learning.

Susan: It seems like the definition's really wrapped around one really important word: better.

How do we define better?

Susan: Better? That's a really squishy word in some certain problems. That kinda gets into what makes certain problems tractable nowadays. If we can really tightly nail down what is better, we're generally pretty good at it in the computer world and the machine learning world. But, if better is a little bit squishy, are you better at playing basketball today than yesterday? Those get into harder and harder questions, such as: "Is this a better solution to world peace?"

Scott: Things can get complicated.

What sorts of questions are a good fit for Machine Learning?

Susan: What are the kinds of problems that computers can do, or that machine learning can attack nowadays, that humans can do, and what are the things that humans can't do? Conversely, what are your thoughts on some of the ones that humans can do that machines are having a problem with today?

Scott: You have it all four ways:

There are some things humans can do and machines can do.

There are some things only machines can do, or do well, and humans can't do them very well. Examples of that would be- have a person pick up a house, machines can do that better than a human can.

Then you can have it the other way around: what can a human do better than a machine?

What can you have that none of them can do very well?

The most interesting part is probably when does a human do a better job than a machine or a machine do a better job than a human? What factors go into that? To come back to the standard topics of images, text, audio, time series, where you click on a webpage? What's the next ad I should show you?

"A human might be able to do a better job than the machine could in normal context, but they would be way too expensive to have them make that decision."

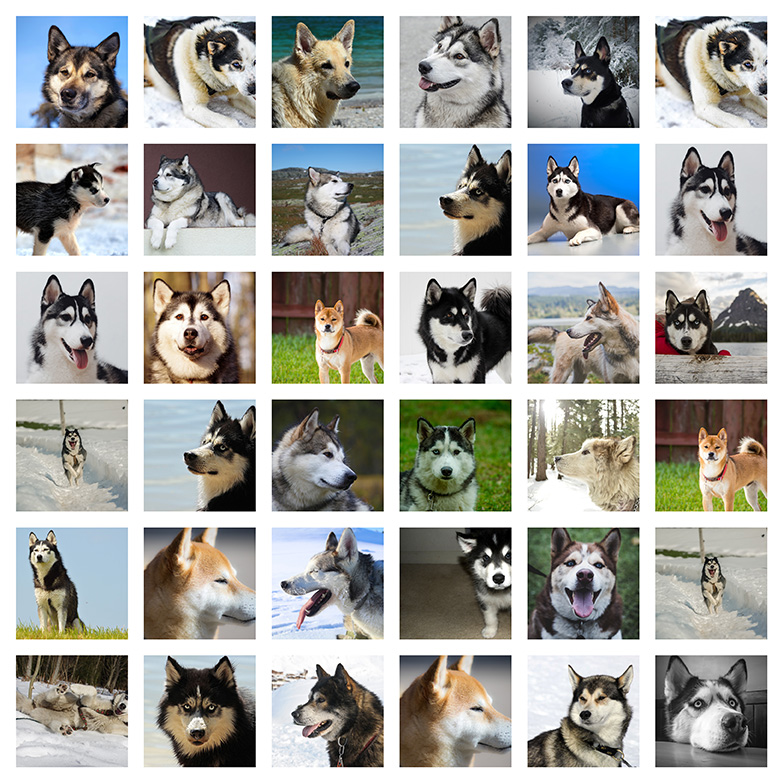

For example, if you had a person sit down and learn all of the dog breeds in the world and really study pictures and give them an infinite amount of time and pump them full of Mountain Dew and Papa John's ... Or Dr. Pepper, in your case, right?

Susan: Dr. Pepper and Cheetos, thank you very much.

Scott: Okay, sorry. And then really sit down and think about all of this, and you get all the time and all the money in the world to figure these out, and then submit your answer, they still might be able to do better than a machine. But,

a machine would be able to do that in like 500 milliseconds. "I just ran through all of them, and then here it is, and maybe I'm a few percent off, but big deal, it was super cheap for me to do it."

"In the world of machine learning nowadays, it's the big data problems that machines are starting to edge out humans on."

Scott: When you've got these tremendous data sets, like you're saying, dog breeds or something that would take an expert, a whole lot of years to get really good at, that's where machines are starting to see some good advantages.

What would a husky vs. malamute training set look like?

Scott: Also when it's complicated, but it's not that complicated.

If there's a lot of things to remember, but it isn't that they all interact with each other and form some complicated mess. It's complicated simply because of how numerous it is. That's really good for a machine to tackle that. They can handle that cognitive load of juggling a lot of different very simple things, but if they all have to interact then it usually doesn't work out as well. For example, if you wrote an article and said, "Hey machine, summarize this article for me." If a human sat down and read that article and they said, "Well, the few main things are these and here's a summarization," then you would be able to listen to that, feel like you had a good idea of what that was. Not every human would give you the same answer though, right? But a machine probably doesn't even come close nowadays, still.

Susan: I think that again you're nailing, from my point of view, one of the big distinguishing pieces here, that says where machines aren't doing well also is tied to where humans give you a lot of different answers. So, if I were to summarize a book for you, I'd get a very different summary than you, as you just pointed out. If I were to give you my impressions on a painting, is a similar example. At that core of the different answers, none of them are necessarily wrong. Some will feel more right or feel more wrong, but that's the core thing, this idea of what is better and what is worse in a squishy world.

Scott: Subjectivity is flowing in there a little bit.

Susan: That's where human problems are really working out.

"If there's some very standardized accepted answer, then you build a highly supervised learning technique, and we're starting to learn how to do that fairly well."

It's that unsupervised, semi-supervised, coming up from what the definition of good is, that machines are a little ways away on.

Scott: People are trying, there are people in the world trying to do summarization on large bodies of text and make it work.

Photo credit: Steve Jurvetson.

Susan: And they're getting better.

Scott: More progress keeps being made, but it's usually in the context of where a human could look at something fairly quickly and give you a quick snap answer on it, is where machines tend to do a fairly good job still. In the case of autonomous cars, "Hey, you're driving in a street, you see a person, should you stop or not?" Stop, pretty easy. It didn't take me very long to compute that as a human, didn't take me very long to compute that as a machine either. More in-depth problems, when I have to think about the sequences into the future, or read a long document. Then it's not such a split-second decision for me.

It takes humans more cognitive load for them to figure this problem out, but machines can't do it at all yet.

What's holding machines back?

Scott: I think there's a couple areas.

Do you have the labeled data for some of it,

Do you have the model architecture that will actually work.

In both cases there, you can find examples where you don't have enough data or any data at all. If you did have data that problem would be solved. But, then also you could find other cases where all the data in the world still isn't gonna fix a problem until you find a model architecture that can actually support that data and be trained and learn from it. When you as a human learn speech and language, you can work from a few set of small examples, you can talk to people in a normal way, and then when you hear a word that you don't understand but you can start to work out context, and maybe even weeks later you see it used in another context, etc. and you stitch it all back together, and that was a totally unsupervised way of learning what that word meant.

A child learning the meaning of the words "Share, or else."

That's how people learn a large amount fairly quickly in over 10 years of their life, and in the long tails as well, without seeing any real supervised part of it. It's a tricky problem.

Susan: It's really hard. I do think that there's an aspect, though, that's pretty important, and that is going back to the fact that humans have an ability to pick their own loss function.

This is a key to any kind of problem you've got, basically any machine learning problem, you gotta know what's better and you gotta know what's worse. We humans all have this ability to say, "I think this is better and I think this is worse," and we learn to make these loss functions a little bit better and a little bit worse. You have a different set of loss functions than I do. That is a really tight core of our ability to go into these semi-supervised and unsupervised worlds, is to develop that loss function, start understanding how we can come up with a way of attacking the problem. The first step is just say, "Did a little better, did it a little worse this time," and then changing that.

Scott: When machines are learning , that is to say: AI, deep learning, etc., they have a lot freedom in certain areas to do whatever they want to do in order to figure something out. But, they have very strict rules in other areas that are like, "The only thing that you care about is making this number go up." They don't even know what the number means, whatever, all you gotta do is make this number go up. In aggregate, maybe across your whole data set, but you gotta make that number go up. So you're very constrained on that. You can fiddle with all these numbers if you want to try to make that number go up, but that's your job.

"Humans can use common sense to be like, 'That's a stupid number to be trying to optimize,' where an AI algorithm doesn't do that yet."

Susan: That's a huge challenge: being able to figure out your own loss function. Once we can get there, I think that the research into that area will lead to good general purpose artificial intelligence, and really crack a lot of problems. As soon as we start allowing that hard, fixed loss function to be modified itself and come up with good ways of dealing with that so it doesn't get out of control and destroy everything, that will really open up a lot of the missing problems, the problems that machines, machine learning can't tackle right now.

What unexpected things should we expect from ML?

Scott: One thing we didn't necessarily talk about a lot is just what unexpected thing, or maybe unexpected, unintuitive thing will a machine be able to do better than a human in the future?

Susan: That's such a wide field. We kind of talked about a generic area, things that are big data, but I'll tell you the one that's coming up quick, and everybody sort of gets this, but I think that we'll really see when the numbers come out- self-driving vehicles. This is one of those things that people think is a highly intelligent task, but I honestly think from a machine learning standpoint we're seeing how much easier it is. Let me give you an example of how easy the task is. When you drive 150 miles, how much of that route do you remember? Almost none. Why is it? Because you've kind of automated all of that in your head.

"That kind of problem, where you can kinda automate it in your head, that means that it basically is a soluble problem so long as we can figure out those little pieces that went into it."

Computers are just gonna destroy us. They're gonna be a lot better at doing it, a lot safer, they can look a lot of different ways, they can see in 18 different spectra that we can't. They can avoid that cat and get you to your destination on time.

Scott: Same thing with figuring out, "Is this a cat or a dog?" You don't think, "Well, it's got an edge of an ear and it's got ..." It's a cat.

Susan: Definitely a cat. A machine will be like, "It's a cat."

Scott: If you don't have to think about it then yeah.

Susan: I don't think people understand it, but any kind of problem where you can just go on autopilot, where you've gotten to a level of mastery where you just don't realize it at the end of the task, those are gonna be where machines will eventually get better than us.

Susan: The problems where you are always constantly thinking and being creative and coming up with, "Oh, but we hadn't thought about this," and that takes you down a new road, "We hadn't thought about this," and it takes you down a new road, and you're mentally engaged the whole time, those are the long, long, long, hard, far away problems for machine learning.

Scott: It's not a closed system that you can just let a machine learning or AI just play in, and say, "Go figure that out." It even takes a while for humans to figure out what loss function, what reward system they would set up for a machine to go learn that.

Susan: It's gonna be interesting to see which problems start falling into that fully automated world, versus which don't. But, there is one other aspect of this, also. By automating all those problems, just to get a little off here, people are worried about machines automating everything away.

The reality is, they're gonna take the 95% that's boring and leave the 5%. That 5% will grow, and that 5% will allow us to focus on the really interesting things and not be bored all the time. We'll probably have more people saying, "I need some downtime because I'm thinking too much."

Scott: It already happened. You had mules and oxen turning mills to grind flour back in the day, or you have steam power doing things that humans would have done, or a tractor rather than having the mule go plow the field. You see those types of things already being displaced now. It freed up time for humans, and that's the evolution of things and that's gonna happen again. People that talk about the industrial revolution or the agricultural revolution displacing jobs, totally true. It does in a short period of time, but then people fill in the gap and start doing more creative things.

Treadwheels or treadmills were used in the middle ages to power cranes, corn mills, and other machinery. Today, we power them electrically so that they help us stay in shape.

Susan: We throw around a lot of terminology all the time, it might be important just to point out things like what is a loss function, when we say loss function, cost function, all these different things.

Reward functions and Loss Functions

Susan: Reward function. Generically, what we're talking about here is an observable truth and comparing our prediction to that, and how far away we are between those two. The real answer was 18.2 and we guessed 18.

Scott: There you go, you're not too far off there.

Susan: There's a certain gap right there.

Scott: But, maybe that is really far off.

Susan: It could be, depends on your scales here. Maybe you could only predict between 17.9 and 18.3.

Scott: But if it's cat or dog, you're building a cat or dog detector, or a hot dog or not, and it says 80% hot dog, 20% not, then okay fine. But, what was the original? The original was not. Then it's like, "Oh, I'm really far off. I said 0.8 on hot dog but 0.2 on not."

Scott: I should have been 1 on not and 0 on hot dog.

Susan: That's another interesting point. When we talk about how computers or machine learning predicts, generally, not every time, it's not this binary zero/one world. We turned it into that. But, a lot of problems, especially classification problems, come down to a probability of one class versus the probability of another class. When we talk about loss functions, the ultimate is to predict 100% on this and 0% on everything else. Even if you predicted 80% on the right class, there's a little bit of gap there, you should have been 100%. So, when we talk about loss, we're talking about compared to the perfect, absolute truth there.

Scott: It would be marked as 1, not hot dog, 0, hot dog. But the next example might be a hot dog, and that's a 1, hot dog, and it's a 0, not a hot dog.

Scott: But having some room for ambiguity there makes some sense. Maybe the times that it guesses half one, half the other it actually is like a half-eaten hot dog, I don't know.

Susan: There's a lot of things that are called hot dogs out there that I'm not sure are actually a meat product.

What is intelligence?

Scott: We said what is learning a little bit, but what is intelligence?

Susan: Intelligence is a really hard thing to nail down, especially when it comes to machines. There's a very good chance, a very real chance, the first alien species we'll meet will be a machine, i.e. we create it somehow and suddenly realize it's intelligent. But, there's also a really high chance we'll realize it too late, meaning it was intelligent for a while but it was so alien to us we didn't understand it.

Beluga Whales are have a particularly developed sonar system which they use for "seeing" in the pitch-black waters 1000m+ below the sea. They also use this sound system for communication. As humans, we have no appreciation for their sensory experience. Photo: <a href="https://www.flickr.com/photos/criminalintent/27640481">Lars Pougmann</a>

When we think of intelligence, we always think human intelligence.

You go out into the animal world and you see things that humans couldn't possibly do from a mental standpoint. Yet, we don't call them intelligent, at least self-aware, like we would think of as ourselves. So there is no one answer, this is what intelligence looks like.

Scott: People tend to define it, though, like what can a human do that's hard.

Can you speak some sound waves and a human can turn that into words?

Can you show a human an image and they can tell you what the object is?

These are intelligence tasks.

Susan: The big ones are, "Can you get some new thing that you have very little experience with and come out with some sort of acceptable set of answers on the other end?" That takes a really high level of function to be able to come up with something like that. That's why we're pretty far away with it in the machine learning world. Everything that we do in the machine learning world all fits within a bin, one single millimeter outside of this bin, and the machine doesn't know what to do.

Scott: I think there's an interesting point to be made about this. When there are underlying laws or rules that can't really be broken, there's chaos around those rules and it's kinda hard to figure out what they are. It's not that hard to drop something and say that things always drop. But it is kinda hard to predict the weather or something. Are there underlying rules on how that works? Yes. So essentially, intelligence may be discovering some of these laws as well. Hey, you're given this mess, but there are these underlying rules that you don't know what they are, but you start to discover them a little bit.

Susan: Figure out the system.

Scott: Take language for example. If you just started spitting out a bunch of different words randomly, it literally would be gibberish. It would take the age of the universe for you to spit out 10 paragraphs that actually make sense if you did it randomly, right? But there are some rules, there's some structure and you start to discover those things and you hang onto them. But, then you move away from that structure just a little bit and that's what creates the fuzz around the world, etc. You can look at how does the earth form and water and eddies flow. There are underlying rules of how fluids move, and how gravity affects things, and how molecules break down. Then you can say, "Look, that's how it is." But if you look from the outside, it kinda looks like chaos.

Susan: Our ability to figure out those systems and then use that knowledge to figure out the future is definitely a big portion of how we are intelligent. But again, it's really hard to say, "What will the first other intelligence we meet look like, and how will it be similar to us? How will it be different to us? Will it be extremely 'better'?" Again, defining what is better, what is worse here. That would be an interesting thing. It'll be a challenge for us. There's a lot of morals and ethics that go into this which we could probably spend hours on, just that subject alone.

Scott: There's been a lot of iteration and time for this to sink in into the data science community, tech companies. They're figuring out how to show ads to people really, really well, probably better than a human can, almost certainly. At least on the scale that we're talking about.

Google ads for the search term "advertising."

Susan: Yeah, that gets down to the notion that people are actually fairly predictable when you find a niche that they're staying in. If you get into a routine, obviously you're predictable, that's the point of routine. One of the first things I played with when I got in the machine learning world was doing a simple zero-one predictor. You would type in zeros and ones, zeros and ones, zeros and ones, and then after a certain amount of time it would guess and not tell you what it thought your next one would be, a zero or a one, etc. etc. And then at the end it would say, "I was this percent right." And it stunned me. It was like 80-some odd percent right, and I'm pulling a number out of here and suddenly...

Scott: You think you're being random.

Susan: You think you're being random, but in reality you're incredibly predictable. You're coming up with patterns all the time and following them. This is one of things we were talking about before, what is that computers and machine learning might be able to do that's pretty interesting. It's predicting humans, even though we say humans are so hard to do. Within certain scopes, humans are incredibly predictable.

Scott: Yeah, and within a certain accuracy that you're willing to give up. Hey, I don't have to be right 100% of the time.

Susan: No. If you can predict 80% of the time. That's really good for a lot of things.

Scott: And it's not gonna get tired either, the machine. But, predicting your next Amazon purchase: Hey, you go buy something and it says, "Look at these eight things," and it's like, "Actually yeah, I kinda wanted that one too."

Susan: Or more interestingly, predicting what to show you in order to guide you to a more expensive purchase.

Scott: I think now, currently, state-of-the-art, really good stuff is happening in ads and recommendation systems.

Susan: That's where the money's at.

Scott: Where the money's at and where the data's at. People interact with it and train it really well, that's for sure. Images, text is coming around, audio's coming around. Time series type stuff is coming around. Driving cars and whatnot is coming around. You have to be a little bit more forceful with them because it's harder to get the labeled data, to get the scale of data.

Susan: Although, as an interesting aside, there's some really great simulated stuff that's going on in the self-driving car world.

Scott: Oh definitely, self-driving, Grand Theft Auto V, just drive around. Why not?

Susan: Get out there and simulate the data.

If you have any feedback about this post, or anything else around Deepgram, we'd love to hear from you. Please let us know in our GitHub discussions .

More with these tags:

Share your feedback

Was this article useful or interesting to you?

Thank you!

We appreciate your response.