Use Your Voice to Draw with ARTiculate

March 24, 2022 in Project Showcase

The team behind ARTiculate wanted to increase access to artistic expression for people who can't use traditional input devices. I sat down with Dan Gooding, Max McGuinness, Tatiana Sedelnikov, and Yi Chen Hock to ask them about their project.

Since the pandemic began, drawing games and applications focused on creative expression have taken off as a way of connecting with people. However, these experiences often either overlook users with disabilities or offer them a second-class experience.

The team explained, "It was apparent to us that in the domain of speech recognition for accessibility, there are many applications for practical matters like word processing and filling out forms, but far fewer for expressing yourself and creating art. We believe that this is an unfortunate missed opportunity and one we wanted to address."

Yi Chen found a paper from researchers at the University of Washington detailing VoiceDraw, a drawing application for people with motor impairments. VoiceDraw is controlled with sounds, such as vowel sounds that act as a joystick. It is powerful, but complex and hard to learn. The team's idea was to ease that learning curve with spoken commands, and ARTiculate was born.

The Project

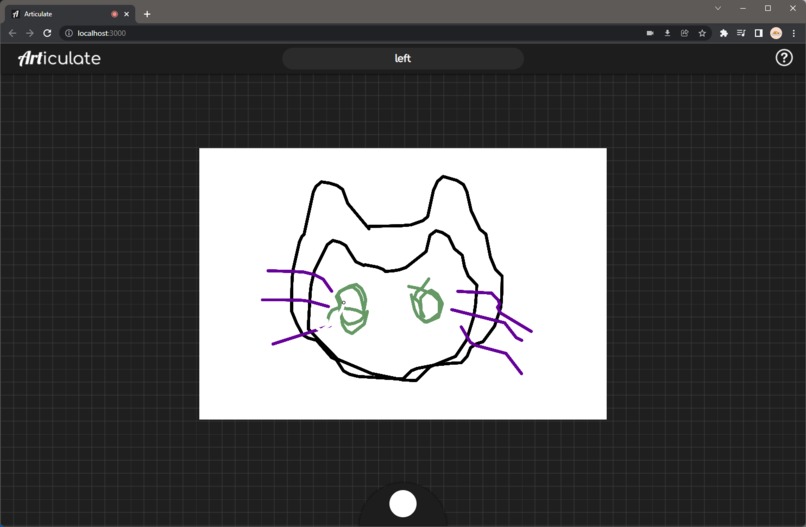

ARTiculate introduces a hands-free drawing experience. Commands like "bold," "down," and "go" control the brush. To make the most of this new input modality, the project also includes advanced features: voice-controlled color mixing, "shortcuts" to jump between bounded regions of the painting, and a velocity-acceleration mode for the brush.
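To make the command idea concrete, here is a minimal sketch of how spoken words could be mapped onto brush state. It is not ARTiculate's actual code: the brush fields, the function names, and the meaning given to each command ("bold" thickens the stroke, "down" points the brush down the canvas, "go" starts it moving) are all assumptions made for illustration.

```javascript
// Hypothetical brush state; field names are illustrative, not ARTiculate's.
function createBrush() {
  return { x: 0, y: 0, dx: 1, dy: 0, weight: 1, moving: false };
}

// Assumed meanings for the three commands quoted in the post.
// Each handler returns a new brush state rather than mutating the old one.
const COMMANDS = new Map([
  ["bold", (b) => ({ ...b, weight: b.weight + 1 })],
  // Canvas coordinates grow downward, so "down" is +1 on the y axis.
  ["down", (b) => ({ ...b, dx: 0, dy: 1 })],
  ["go", (b) => ({ ...b, moving: true })],
]);

// Apply every recognized command word in a transcript, in spoken order.
// Unrecognized words are ignored.
function applyTranscript(brush, transcript) {
  return transcript
    .toLowerCase()
    .split(/\W+/)
    .filter((word) => COMMANDS.has(word))
    .reduce((state, word) => COMMANDS.get(word)(state), brush);
}

// Advance the brush by one unit in its current direction if it is moving.
function step(brush) {
  if (!brush.moving) return brush;
  return { ...brush, x: brush.x + brush.dx, y: brush.y + brush.dy };
}
```

In a P5.js sketch, something like `step` would run once per frame inside `draw()`, with the stroke weight taken from the brush state. Keeping the handlers in a lookup table means a new command is one more entry rather than another branch.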

Thanks to Deepgram's Speech Recognition API and our documentation, the team had a minimum viable product working very quickly. They then expanded their use of Deepgram, using our search feature to find command words.
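As a rough sketch of what "finding command words" can look like on the client side, the function below filters search hits by confidence and orders them by when they were spoken. The response shape it reads (a `search` list per channel, each entry holding a `query` and `hits` with `confidence` and `start`) is an assumption for illustration, as are the function name and the 0.8 threshold; check the Deepgram documentation for the exact format.

```javascript
// Assumed response shape (not verified against the live API):
// response.results.channels[0].search = [
//   { query: "go", hits: [{ confidence, start, end, snippet }] }, ...
// ]
// findCommands and minConfidence are hypothetical names for this sketch.
function findCommands(response, minConfidence = 0.8) {
  const searches = response?.results?.channels?.[0]?.search ?? [];
  const found = [];
  for (const { query, hits = [] } of searches) {
    for (const hit of hits) {
      // Keep only hits the recognizer is reasonably sure about.
      if (hit.confidence >= minConfidence) {
        found.push({ command: query, start: hit.start, confidence: hit.confidence });
      }
    }
  }
  // Return commands in the order they were spoken.
  return found.sort((a, b) => a.start - b.start);
}
```

A confidence threshold like this trades missed commands against false triggers; for a drawing tool, an accidental "go" is probably more disruptive than having to repeat a word.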

The canvas was built with P5.js, a library for creative coding in JavaScript. We finished publishing a three-part series on using P5.js earlier this week. The team also used React, which let team members work on their own components and easily glue them together into a complete application later. Because the application is highly visual, the team paid close attention to smaller details, such as animations.

The team has plenty of extensions planned, including the ability to fluidly pull images from the web and insert them into the canvas, and additional accessibility options such as custom voice commands and color-blindness options to assist with color mixing.

You can try ARTiculate by visiting art-iculate.netlify.app.

If you have any feedback about this post, or anything else about Deepgram, we'd love to hear from you. Please let us know in our GitHub discussions.
