OpenAI's Whisper is an exciting new model for automatic speech recognition (ASR). It features a simple transformer-based architecture, the same technology that drove recent advances in natural language processing (NLP), and was trained on 680,000 hours of audio in a wide range of languages. The result is a new leader among open-source solutions for ASR.

The researchers at Deepgram have enjoyed testing Whisper and seeing how it works, and we wanted to make it as easy as possible for you to try it out too. One of the things we've learned in our experiments is that, as with many deep-learning tools, Whisper performs best when it has access to a GPU. While downloading and installing Whisper may be straightforward, configuring it to properly utilize a GPU (if you have one!) is a potential roadblock.

Google Colab provides a great preconfigured environment for trying out new tools like Whisper, so we've set up a simple notebook there to let you see what Whisper can do. The notebook doesn't need anything extra to run; you can just click through and go. The notebook will:

Install Whisper

Download audio from YouTube

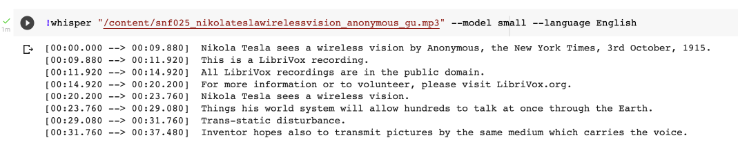

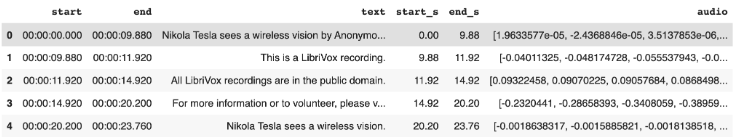

Transcribe that audio with Whisper

Play back the audio in segments so you can check Whisper's work

And finally... quantitatively evaluate Whisper's performance by computing the Word Error Rate (WER) for the transcription
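To give a feel for what that last step measures, here is a minimal sketch of WER, not necessarily how the notebook computes it. WER counts the word substitutions (S), deletions (D), and insertions (I) needed to turn Whisper's transcript into a reference transcript, then divides by the number of reference words (N): WER = (S + D + I) / N. The function `word_error_rate` is a hypothetical helper of our own; it assumes text is normalized only by lowercasing and splitting on whitespace, whereas real evaluations usually also normalize punctuation and numbers.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Compute WER = (S + D + I) / N using word-level edit distance.

    Assumption (hypothetical helper): normalization is limited to
    lowercasing and whitespace splitting; punctuation is left as-is.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] is the edit distance between the first i-1 reference words
    # and the first j hypothesis words (one row of the dynamic-programming table).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution (cost 1) or match (cost 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    reference = "the quick brown fox jumps over the lazy dog"
    hypothesis = "the quick brown fox jumped over a lazy dog"
    # Two substitutions out of nine reference words.
    print(f"WER: {word_error_rate(reference, hypothesis):.3f}")  # prints "WER: 0.222"
```

Because insertions count against the score, WER can exceed 1.0 when the transcript is much longer than the reference. For anything beyond a quick check, an existing package such as `jiwer` may be more convenient than hand-rolled code.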

We think the files we chose are fun, but if you have files that you want to test Whisper on, it should be easy to upload them and drop them in!

If you have any feedback about this post, or anything else around Deepgram, we'd love to hear from you. Please let us know in our GitHub discussions.
