Should AI be Regulated? — AI Show

January 11, 2019 in AI & Engineering

Scott: Welcome to the AI Show. Today we're asking the question: should AI be regulated?

Susan: This is a big one. The government has figured out that AI exists.

Scott: Like, "Whoa. Wait a minute. This is important stuff."

Susan: It's like the government has realized that maybe the world can change and all sorts of things can happen, and that means, "We're going to regulate it." Should AI be regulated? It's going to be.

So really the question is how it's going to be regulated. What does it take to do that? How do you even define something from a legal standpoint?

Scott: That's a pretty rough one. You can start with technology, right? Anything that is made from a computer.

Susan: We've struggled with this question multiple times on this show.

How do you define this versus that?

Susan: You go through the internets and the wikis, and suddenly there are eight different definitions for one set of tools. Over time these things blend together so seamlessly that you don't even realize where AI ends and other things begin. It's a big challenge.

Scott: I don't know. It's a big problem.

Susan: First, can we define it in a legal, well-pinned-down way that will be durable? So that five or ten years from now, this legal definition of AI can still be used to say, "This needs to be regulated in these different ways."

Scott: Probably not in any good way. You set something down now, and it seems all right. But what are you going to do? Anything that looks smart? No, they're not going to do that. Anything that uses GPUs? Okay, probably not. Software that gets better over time? Software that gets better with exposure to data? What definitions are you going to come up with?

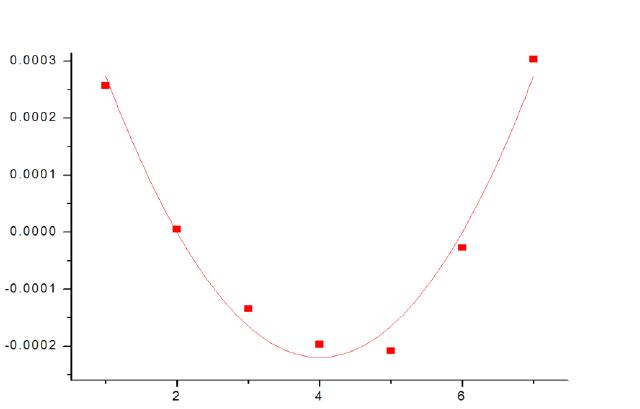

Susan: Will curve fitting in Excel suddenly be controlled? You just did linear regression on those points there. So yeah, that's a huge challenge. I think that just the beginning is fraught with danger.
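To make the curve-fitting point concrete: a spreadsheet trendline is ordinary least squares, which "learns" two parameters (a slope and an intercept) from data, the same basic idea behind many machine learning models. Below is a minimal Python sketch using only the closed-form formulas; `fit_line` and `predict` are hypothetical helper names chosen for illustration, not part of Excel or any library. A definition like "software that gets better with exposure to data" could plausibly be read to cover something this simple, which is exactly the worry.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    # The fitted line always passes through the point of means.
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the fitted line to a new x value."""
    slope, intercept = model
    return slope * x + intercept


# The "training data": a handful of points, like cells in a spreadsheet.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
model = fit_line(xs, ys)
print(model)
print(predict(model, 6))
```

With these five sample points, the fitted slope comes out at about 1.99 and the intercept at about 0.05, so the "model" predicts roughly 12 for x = 6.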

Scott: I think there's no way that this could be regulated well, basically. You can regulate it, and maybe government will because of hype and things like that, but it's probably a mistake.

Susan: I'm going to take a contrary view. Some regulations have helped industries in the past.

Scott: Like what?

When has regulation helped in the past?

Scott: Like banking. No, it's true. It helps with banking.

Susan: Basically, when it comes down to, say, protecting consumers or something along those lines, there are examples where regulations have helped. But this is a really big challenge, because first of all, the regulations that are starting to come out are talking about export laws, export controls, because of AI being weaponized. That's not really about consumer protection. It's going to be really, really hard to find something beneficial for the average person in regulations like that. But the right legislation can also help grow an industry. If you're company x and you know regulations are coming, but you don't know what they're going to be, then once they're finally set down, at least you know the playing field. So long as they're not too damaging, you can now step in understanding there's a safety net: here's my boundary, don't go past it, and I can build within this niche. Now, the problem, which we've already started talking about, is whether you can build those boundaries in any meaningful way.

Scott: There will be regulations of some type, but there are lenient regulations, and then there are real regulations where you have to do things this way and comply that way, et cetera. The US was in a unique position in other, similar revolutions in the past. We were the leaders, and we were the ones that had the resources to go after things. In the later part of the Industrial Revolution and the tech revolution, we didn't have to worry so much about other players. That's not true anymore. China is a powerhouse. They have a huge population. They have their own economy. They don't have to rely on the US, at least from a tech perspective. They've got their own Twitter, their own Facebook, their own everything. And you could say, "Oh, well, they're all copies of the US." It doesn't matter. They're a huge economy with tons of people, and data flows freely. Models and algorithms flow freely. Their population is about four times the size of the US's, and now they're waking up too. Their middle class is only going to get bigger. In the US, we're pretty stagnant. So when you say, "Hey, we're going to start throwing ratchet straps on everything and tying it all down. Nothing can leave the US," well, that's fine, except less money will be left in the US to develop AI. Not none, just less. And data won't be free to train the algorithms. Meanwhile, in China, data's flowing freely, money's flowing freely, and their economy is bolstered massively. And here we are, effectively saying we're the future Europe.

Susan: It's definitely a challenge. Like you said, a very good example is that they have their own Twitters and their own Facebooks, but they're fairly blatant copies in some ways.

Scott: Yeah. But they work.

Susan: That's exactly the point. They don't have the controls that say, "You're not really allowed to do that." So if we start tightening the screws and stop getting the benefits of seeing the results of their research, incorporating it, and getting that cross-pollination, I do think we could do real harm here and quickly fall behind by putting on brakes that are meaningless. Take arms controls: you can stop physical pieces from leaving the country. Some of the things it takes to build big weapon systems just can't be easily reproduced in another country. Because it's a physical thing, arms controls make sense.

Physical objects can be controlled more easily than bits and bytes on the web. Here, container trucks are scanned with X-ray machines.

Susan: But when it comes to machine learning and all that stuff, you can be an 18-year-old kid in your basement with a computer and come up with some great, crazy new model tweak that rivals what others are doing. It's becoming a little bit harder; I'm not saying it's that easy.

Scott: We can always do this with software though. Do we regulate software?

Susan: We do, some. And how well does that work? The challenge here is that we'd be regulating something where the people we're trying to stop are already on par with us, and they're going to keep going. It just doesn't make a lot of sense.

Scott: But, okay. Regulations are coming.

Susan: Yeah, they're coming. How do you deal with it?

Scott: Are they going to go with some technological definition, or are they going to say maybe, "For civilian use you can do whatever you want, but for military use you can't"? How are they going to draw the lines here?

Susan: There's a request, or a notice, about regulations coming, and inside of it they're discussing how to possibly define AI. Part of what they're asking for comments on is how it should be defined. But it doesn't really break things down into military or civilian, and you've got to think a lot of the civilian stuff could easily be used for military stuff. Think about drones. "Hey, I want to build a drone that does a whole lot of image recognition. I want to build that farm drone I've always thought about." Well, that exact same technology could obviously be used for military purposes. So you can't just say, "Oh, if it's civilian, go for it," because you can flip that switch and in 10 seconds turn it into something military. So these regulations are not going to split easily between civilian and military uses.

Scott: But what are they going to regulate, the code? Like saying, "Don't open-source AI anymore"?

Susan: That's a real challenge. But there are also a lot of different things regulation can touch. How they're going to do it is, to me, a huge question for the future. I don't know. I don't personally see an easy solution here, but I can see the intent is, of course, import and export controls on military weapons. But not only that. Think about biomedical research and applications in hospitals and things like that. Those regulations are definitely going to happen. Maybe autonomous vehicles: "Here's a set of standard datasets that your machine learning algorithms must pass for a vehicle to be considered drivable in California." Those types of regulations, those types of laws, are clearly coming and are probably warranted. I can easily see standard datasets from the government being a form of regulation or control for specific applications. But saying, "You can't ship this piece of code across the Internet because it was built using some function in toolkit x"? It's really hard to even understand how that could work.
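The "standard datasets" idea is the easiest of these to picture mechanically: a regulator publishes a fixed test set and a minimum score, and a system either clears the bar or doesn't. The Python sketch below is purely hypothetical; the function name `passes_benchmark`, the toy test set, and the thresholds are invented for illustration, and any real certification scheme would likely be far more involved than accuracy on a single benchmark.

```python
from typing import Callable, Sequence, Tuple

# One test case: (input, expected label). Hypothetical format.
Example = Tuple[object, str]


def passes_benchmark(model: Callable[[object], str],
                     test_set: Sequence[Example],
                     min_accuracy: float) -> bool:
    """Return True if the model's accuracy on the fixed test set meets the bar."""
    if not test_set:
        raise ValueError("test set must not be empty")
    correct = sum(1 for x, expected in test_set if model(x) == expected)
    return correct / len(test_set) >= min_accuracy


# A tiny made-up "official" test set and a toy model to run against it.
test_set = [
    ("red light", "stop"),
    ("green light", "go"),
    ("stop sign", "stop"),
    ("yellow light", "slow"),
]


def toy_model(scene: object) -> str:
    # Deliberately naive: go on green, stop on everything else.
    return "go" if "green" in str(scene) else "stop"


print(passes_benchmark(toy_model, test_set, min_accuracy=0.75))
print(passes_benchmark(toy_model, test_set, min_accuracy=0.9))
```

The toy model gets 3 of 4 cases right (it misreads the yellow light), so it passes at a 0.75 threshold and fails at 0.9. The appeal of this style of rule is that it regulates measured behavior for a specific application rather than trying to define "AI" in the abstract.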

Scott: The closest thing I can think of with real export restrictions on it is FPGAs. There are really fancy FPGAs, created in the US, that still have US export restrictions on them. An FPGA is a lot like a processor, but it can perform like an ASIC, an application-specific integrated circuit, while still being programmable, if that makes any sense. FPGA stands for Field-Programmable Gate Array: after the chip is made, you can reconfigure its logic. There are millions of very small logic blocks on it, and loading a new configuration changes what they compute and how they're wired together. The military doesn't want them exported... you can't ship those out of the country if they're above a certain performance level.

Though they look like "just another chip," FPGAs are an inherently different sort of data processing device, and they often have military applications. This is because, despite their lower clock speeds, they are capable of massively parallel computation.


Scott: The companies that make these, like Xilinx, can't ship their high-end stuff to China. But that's a physical object. It's hard to produce. It's made with tooling that would be very hard to replicate somewhere else. Code is a different story.

Susan: But even on the FPGA front, honestly, that seems like a technology that should be a lot more prevalent. And I can only imagine that drawing a line above a certain performance level has really squashed the industry. Why go for that performance level if you know you won't have worldwide distribution for it, and you'll have to get through all sorts of regulatory hurdles? Even to ship it within the US, you're going to have to jump through hoops. So it's probably had a chilling effect. It's not my area, so I can't say for certain, but it seems like there are some issues there. Another one that's pretty relevant is cryptography. That one's right in the wheelhouse here, since it's software. There's a long history of cryptography spreading all around the world, and it really hasn't been stopped. Putting limits on things like key sizes in bits is just ridiculous. It's like, "Oh, this program is okay, so long as you can't increase this one counter above this level." You really think that stopped its use elsewhere? It's a challenge. How are they going to identify it? What are we going to do about it? What industries, what areas is it going to affect?
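The "one counter" comparison is close to literal. In the minimal Python sketch below, key length is a single integer argument. The 40-bit `EXPORT_LIMIT_BITS` value is a hypothetical cap chosen for illustration, and `generate_key` and `keyspace` are our own helper names, not any crypto library's API; `secrets.token_bytes` is Python's standard-library source of cryptographically strong random bytes. The point is how little code separates a "permitted" build from a restricted one, and how quickly the brute-force search space grows with each added bit.

```python
import secrets

# Hypothetical cap for illustration: an "exportable" build limited to 40-bit keys.
EXPORT_LIMIT_BITS = 40


def generate_key(bits: int) -> bytes:
    """Return a random symmetric key of the given length in bits."""
    if bits <= 0 or bits % 8 != 0:
        raise ValueError("bits must be a positive multiple of 8")
    return secrets.token_bytes(bits // 8)


def keyspace(bits: int) -> int:
    """Number of possible keys an attacker would have to search exhaustively."""
    return 2 ** bits


weak = generate_key(EXPORT_LIMIT_BITS)  # 5 bytes
strong = generate_key(128)              # 16 bytes
print(len(weak), len(strong))

# Each extra bit doubles the search space: 128-bit keys have 2**88 times
# as many possibilities as 40-bit keys.
print(keyspace(128) // keyspace(EXPORT_LIMIT_BITS) == 2 ** 88)
```

Changing `EXPORT_LIMIT_BITS` from 40 to 128 is a one-line edit, which is why a rule written around a number like this is so easy to sidestep once the code exists.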

What about importing?

Scott: Well, we've talked about the export side of it. What about the import side? If we regulate to the point where we can't actually beat others, and they have the good stuff, will we be able to import the good stuff?

Susan: Oh, well, here's another question. Just like there are data havens starting to pop up because of GDPR and things like that, will we have machine learning havens? Will Amazon put the core guts of Alexa in China, because nobody can really rip them apart there, since they were able to train and do things there that they can't do here? Or vice versa?

Scott: Probably.

Susan: Again, that's some huge, crazy speculation.

Scott: At the end of the day, regulation, data, privacy: these things matter, and you shouldn't abuse them. But people do want a better life. They want more productivity. They want good products. The way to get there is with data: training on it and building a smart system. The way we used to do that was to just have humans do it. That's really expensive, and humans can only do so much. Now we have the opportunity to have machines do things humans could do, maybe at a hundred times the productivity, and we're worried about it.

This chart from ourworldindata.org shows how, over time, workers have been able to afford more and more food for the same amount of labor. The greatest cause of this improvement is mechanization and technological progress. AI may have a similar effect.

Scott: Well, you should be worried about it, but you should also think about the productivity gains you're squashing. More people could be fed, and more people could have better health care, if you let this ride for a few decades; a rising tide lifts all boats here. Everything becomes more productive. Of course, it will only be the fairly developed countries benefiting from it in the beginning, but that stuff does normalize across the world. Just like mobile phones.

Susan: That's the hope.

Scott: It takes time, though. It might not be in our lifetime. It might be that over the next 50 years there are AI powerhouses. But this stuff relaxes. Look at steam... you can't find a steam engine anywhere now. Okay, it's 200 years later and everything's fine. There are other, better things.

Susan: In general, things have been happening more and more, and faster and faster. I'm not going to go into the Kurzweil stuff here.

Scott: Oh no.

Susan: But society's struggling to catch up with technology. Technology used to move at basically the pace of society. When a new thing came along, we integrated it over a generation.

Scott: You didn't have to change yourself very much in your lifetime.

Susan: Social norms would adapt to that one new thing, but that was a while ago. Now there's a new thing, and a new thing, and a new thing, and another new thing. We're still trying to catch up with the fact that you can call someone anywhere in the world for basically nothing. We're still catching up with technology that came out in the '80s and '90s, finding regulations and ways of integrating its social implications. So this is just another one, and it's so massive and changing so fast. Going back to the definition problem of what is AI, what is not, and what you can and can't do with it, just skip ahead ten years. Picture a speech model that's been trained on x million hours of data, and it's perfect and amazing, but the data is no longer attached to it. It's just 100 megs' worth of weights or something like that. Can you transfer those hundred megs of weights if you can't touch the data? You're separating those two now. You've got a knowledge store that represents an entire huge thing.
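For a sense of scale on "100 megs worth of weights": if that means 100 megabytes of 32-bit floating-point parameters (an assumption; real model files vary in numeric precision, format, and overhead), it works out to about 25 million learned numbers. The hypothetical helper below, `approx_param_count`, just does that division.

```python
def approx_param_count(size_bytes: int, bytes_per_param: int = 4) -> int:
    """Rough count of parameters that fit in a weights file of size_bytes.

    Assumes every parameter uses the same precision (4 bytes for float32,
    2 bytes for float16) and ignores headers and other file metadata.
    """
    if bytes_per_param <= 0:
        raise ValueError("bytes_per_param must be positive")
    return size_bytes // bytes_per_param


MB = 10**6  # decimal megabytes

print(approx_param_count(100 * MB))     # float32: 25,000,000 parameters
print(approx_param_count(100 * MB, 2))  # float16: 50,000,000 parameters
```

Either way, the file is tiny compared with the training data it was distilled from, which is part of why separating the weights from the data makes the legal question so slippery.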

Scott: But it represents experience.

Susan: That represents a huge data cache in a lot of ways. It's this idea of something small that can represent something huge. Taking the model weights is like stealing a company's core engineer and moving them somewhere else.

Scott: But they're replicable. They're cloneable. Instantly. Which I think is actually a really interesting thing, and it's going to have to be figured out in the legal landscape. We have a new being, in some sense of the word, that has a bunch of experience, and you can pump electricity into it and it does stuff. It can be infinitely replicated, sent somewhere else, and run on other hardware. But just as you said, it's not taking the data with it anymore. It's just taking its experience. Well, hey, we already have that. It's called humans. When people get a job somewhere, they work and they do things. Could they reveal specific data from that company that they shouldn't? They could, but they're not going to. It's hard to remember it. You're going through lines and lines of Excel data or whatever; you don't remember it. You remember the basic stuff, the platonic truths of what you were working on. But you know what you do when you get hired somewhere else? You take all of those learnings and use them there. It's a very, very similar thing. You're transporting this around: you can learn from something over here, then take a new job and learn from something over there. That's the trajectory of your life. So what is that? This is a gray area for sure.

Susan: If someone steals that from your company, is it worth the storage cost... of 100 megs?

Scott: Is it kidnapping?

The idea of stealing scientists isn't just the stuff of movies like Wild Wild West or The League of Extraordinary Gentlemen. In the 1940s, the goal of Operation Paperclip was to smuggle/kidnap/liberate German rocket scientists. Wernher von Braun was one captured scientist who merely went on to spearhead the Saturn V project, which put humans on the Moon.

Susan: Or is it worth billions of dollars? Like I said, we've been talking about regulations, but the legal question of what these new entities are is clearly wide open. Stealing all the code behind something is one thing, but stealing a model that took who knows how many GPU hours and how much data to build is a huge deal.

Scott: The world is changing. The world is getting a lot smaller. One thing that's true, though, is that communication can happen roughly instantly now. You can talk to and see almost anybody anywhere in the world. But you can't just go anywhere in the world instantly, and that holds things back a little bit right now. We're in San Francisco; if you want to go tell your friend in New Zealand something, or show up to their wedding or whatever, you're not going to be there two seconds later.

Scott: And so that actually preserves some of the variance in the world. Meaning there are still distinct cultures.

Susan: Geography still matters.

Scott: Geography still matters. I don't come at this thinking the world is all the same, that everybody is evolving to the point where all of our cultures will just become one. I don't think that's strictly true, but on average I think it's pretty true. There are things that still stick around, and this is just another step in that direction, essentially. Not everybody, but all of the different cultures become more productive. They have better health, and they have more free time. Everybody's lifted up by that. But they're not going to be identical, mostly because of geographical differences. It's hard to move.

Susan: Geography, terrain: that's one of the few things helping to slow this stuff down.

Scott: And this is what regulation's getting at. Saying, "Hey, you can't just move this one thing from here to there, because we are us and you're them, and there's a real geographical difference between us." So that's actually going to persist. Probably in the next hundred years, there won't be just one country. Right?

Susan: Yeah. Every single sci-fi story has a... what is the generic sci-fi name for the one-world government that comes up in all the sci-fi stories?

Scott: What, the empire?

Susan: No. That's generally a thousand years out. In sci-fi, let's say it turns into an empire from a thousand years onward. In the near term, most sci-fi says there's a one-world government within a generic 50 years.

In the Pixar movie WALL-E, the de facto world government is the Buy n Large corporation.

Scott: Got it.

Susan: But really, where we're heading, like with most things, is that we're going to need some test cases in life to help us clarify these things. One recent example is, of course, Google search in China. This clearly touches on the areas we're talking about here, and it's a test case for how we react. Will we start building more and more legislation that says, "Hey Google, you can't bring your search technology into China for reasons x, y, and z. Or if you do, you have to do it under these conditions"? That's actually a great example, because the problem there was that Google was bending to what another country wanted, as opposed to giving full rein to what it could do. They were limiting themselves. Those types of test cases are going to happen. They're probably already happening; we just aren't aware of many of them. We'll see them pop up in the news day after day until they reach a certain momentum, and we'll find small key things that help us handle them better the next time. The real challenge, though, is: will we go too big too early? I think we both agree that doing big, rash things right now would probably be more harmful than helpful.

Scott: You probably want to go in the soft direction rather than the hard direction: something like the United Nations, rather than hard laws where you put people in jail. That kind of thing.

Susan: Especially when whatever definition you come up with is going to be fairly arbitrary. Suddenly, just having speech recognition in your app means your app isn't allowed to be exported, and it's like, "What?" That would be a huge challenge, especially since it's being integrated into so many areas.

What are the benefits of regulation?

Susan: Well, like we said before, the benefit is that it can stabilize the market. It can give stability to companies.

Scott: Like, "Hey, this new thing is here. What should we do as a company? What should we plan for? What should we-"

Susan: You know your boundaries. You can work within boundaries. That's a benefit because it reduces risk: you know that if you stay within them, you're not going to get arbitrarily smacked. Another benefit, which we talked about, is on the consumer side of the house. Good, well-crafted consumer protections can be hugely beneficial to consumers and also to companies. They keep everybody on the same playing field, since everyone has to put consumers first to some degree.

Scott: Safe play area. It's a lot like children. "Stay on your block. Hey, you can do a lot of things in this area, but don't set things on fire. Don't stick forks into electrical outlets. Don't cross the road."

Susan: But like we talked about, even going down those routes, once you start putting those things into place, you're pigeonholing yourself, and you run serious risks.

Naming something is a very powerful thing. As soon as you name a thing and say that something else falls under that name, nothing is a perfect fit. Especially in an industry that's changing so much, so quickly, every single day...

Scott: It's also hard to deregulate. That's not usually a thing that happens. Regulations come in and then 500 times more work goes into getting rid of them.

Susan: It's far easier to say, "Well, I'll just leave it in place and we'll add more to things." Man, it's a sticky wicket, right?

Do you think there should be punishments? What would the punishment be?

Susan: Oh. Punishments?

Scott: Is it just monetary?

Susan: This is the whole self-driving car dilemma. Should you hold car company x liable for the one death a year it causes?

Scott: That's true. That's true.

Susan: Do you treat a company like an individual? Since corporations are now legally persons, does that mean you can execute a company if it maliciously killed someone on the road? Can you put it in jail? That's another thing. Again, going back to the regulation route, I can almost guarantee you that if we had a very clear, consistent standard for the liability and damages that apply when a collision happens, even if it were a little bit "mean" to the car companies, that would allow a lot more companies to enter the market, because they'd know they have a known risk, as opposed to an unknown risk, which is a lot worse. What should happen if you design something that's used to kill people? I don't know; gun manufacturers probably have a lot to think about on this too. That's an example of an industry that doesn't face a lot of the repercussions, but there are a lot of other industries that do. If you make a toy that chokes one child, you're facing huge, huge damages.

Scott: Well, it's like a ski resort versus the playground. Everything in a playground has to be so you can't hurt yourself, but ski resort's fine. Yeah, just send kids down. They can run into trees. Whatever. It's just history and how people work and what their norms are. It's just weird.

Susan: And it comes down to when a test case hit: ski resorts versus manufacturer x versus whatever. When that test case hit, we were forced to make a decision, and that decision stuck. Even if technology and times have changed, that decision still stands.

Kinder eggs are a good example. You can't import Kinder eggs because a kid may choke on them.

Scott: Kinder eggs are chocolate with a toy inside, in a little egg shape, and in the US you can't get them.

Susan: Yeah. I know. My son loved them when we were living overseas.

Scott: Because a child might eat the toy and choke on it.

Susan: For something this big, it's ridiculous.

Scott: But yeah, that's the idea.

Where do you think we'll be 20 years from now?

Scott: I think we're at a decision point here. If you want the US to truly just become China's vacation spot, the new Europe, where there isn't really anything going on and everybody just chills out and coasts for the rest of their lives, then you regulate a whole bunch. You keep your lifestyle the way it is, and that's that. If you still want to be the dominant power in the world, you can't go hard on regulation of AI and/or software or whatever. You can't start protecting. You can't go into protect mode.

Susan: If you crack down on innovation, you're going to kill this industry. Obviously China's been the example, but in my time in Scotland, there was a lot of push to get innovators to stay. We're seeing other areas, other regions of the world, doing a lot more than the US to encourage things. When I first came to the Bay Area, it was a good example. People were coming to the Bay Area because that's where all the ideas were, and that's where everybody wanted to be. There was this huge upwelling of, "This is where it's going to be at." It's still there, but a lot more people are saying, "I'm here because of the momentum." Whereas if you go to, say, Edinburgh, there are lots of programs. They're building that momentum. People are going there because there's a big push to make this stuff happen, and they're building what we are now coasting on.

If you have any feedback about this post, or anything else around Deepgram, we'd love to hear from you. Please let us know in our GitHub discussions.
