Building the Future of Voice - Scott Stephenson, CEO, Deepgram - Project Voice X

December 9, 2021 in Speech Trends

This is the transcript of the opening keynote presented by Scott Stephenson, CEO of Deepgram, on day one of Project Voice X.

The transcript below has been modified by the Deepgram team for readability as a blog post, but the original Deepgram ASR-generated transcript was 94% accurate. Features like diarization, custom vocabulary (keyword boosting), redaction, punctuation, profanity filtering and numeral formatting are all available through Deepgram’s API. If you want to see if Deepgram is right for your use case, contact us.

[Scott Stephenson:] Hey, everybody. Thank you for listening and coming to the event. I know it’s kind of interesting. For us, at least, at Deepgram, this is our first in-person event back. Maybe for most people, that might be the case. But, yeah, I’m happy to be here, happy to meet all the new people. And I just wanna thank all the other speakers before me as well, a lot of great discussion today. I’m also going to talk about the future of voice, not the distant, distant future. I’ll talk about the next couple of years. But I’ll give you a little bit of background on myself before we do that.

So I’m Scott Stephenson. I’m the CEO and cofounder of Deepgram. I’m a technical CEO, so if people out there wanna talk shop with me, that’s totally fine. I was a particle physicist before, so I built deep underground dark matter detectors and that kind of thing. If you’re interested in that, happy to talk about that as well. But, yeah, Deepgram is a company that builds APIs. You just saw some of the performance of it, but we build speech APIs in order to enable the next generation of voice products to be built. That can be in real time. It can be in batch mode. But we want to enable developers to build that piece. So think of Deepgram in the same category that you would Stripe or Twilio or something like that: enabling developers to build voice products.

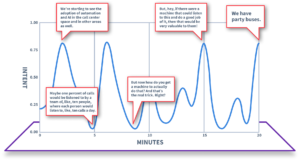

I’m gonna talk about something in particular, and then we’ll maybe go a little bit broader after that. I’ll talk about something that’s happening right now, which is we’re starting to see the adoption of automation and AI in the call center space and in other areas as well. In particular, you see it really ramping up in meetings and call centers. But one thing I see is this trend away from the old way, which was, if you wanted to figure out if you had happy customers or how well things were going, what you would do is ask them. You would send out a survey. You’d try to corner them. You’d try to do these very unscalable things, or things that maybe had questionable statistics behind them on how well they worked. But you basically didn’t have an alternative.

And so I’m here to talk a little bit about what’s actually happening now. Yes, some of that old stuff is still happening, but you already hear me calling it old stuff. Because what’s actually happening now is people are saying, hey, we already have piles of data. There are phone calls happening. There are voice bot interactions. There are emails. There are other things coming in.

Looks like we lost our connection. I’m not sure why. K. Sorry about that. We’ll see if I can… I don’t think I hit anything, but let’s see.

Ok. So it can be text interactions. It can be voice interactions. There are a number of ways that customers will interact with your product. Could just be clicks on your website, that type of thing. But if you have to ask for the survey, if you have to send a text afterward, that type of thing, then you’re gonna get a very biased response: people that hate you or love you. What about the in between? What about what they were thinking in the moment?

And so I’m saying, hey, that was the old way. The new way is, if you want to figure out what’s going on, do product development with your customers, and see how all that goes, stop asking them and just start listening to them.

So what do I mean by that? I mean, just take the data as it sits and be able to analyze it at scale to figure out what’s going on. This could be a retail space, where you have microphones and people are talking about certain clothes that they want to try on: hey, I hate this, that looks great, etcetera. Just listen to them in situ. If it’s a call center, it’s somebody calling in saying, I hate this, I like this, etcetera, right there in situ in the data.

Essentially, data scientists like myself and lots of other people look at this huge data lake, a dark dataset that previously was just untouched. Maybe if you’re in the call center space, you know that there would be a QA sampling kind of thing that would happen. Maybe one percent of calls would be listened to by a team of, like, ten people, where each person would listen to, like, ten calls a day or something like that. They would try to randomly sample and see what they could get out of it, but it’s a really, really small percentage. And, you know, it had some success, but marginal.
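To put those sampling numbers in perspective, here is a back-of-the-envelope sketch in Python. The inputs are the rough figures from the talk (ten reviewers, about ten calls each per day, about one percent coverage); the function name is made up for illustration, and the result is only the volume those figures would imply, not a number stated in the talk.

```python
def implied_daily_call_volume(reviewers: int, calls_per_reviewer: int, sampled_percent: int) -> int:
    """Calls per day implied when a manual QA team covers `sampled_percent` of traffic."""
    reviewed = reviewers * calls_per_reviewer
    # Integer arithmetic keeps the result exact for whole-number percentages.
    return reviewed * 100 // sampled_percent


# Rough figures from the talk: ten reviewers, ten calls each per day, one percent coverage.
reviewed_per_day = 10 * 10
total_per_day = implied_daily_call_volume(reviewers=10, calls_per_reviewer=10, sampled_percent=1)
unheard_per_day = total_per_day - reviewed_per_day

print(f"reviewed per day: {reviewed_per_day}")
print(f"implied total per day: {total_per_day}")
print(f"never listened to: {unheard_per_day}")
```

Under those assumptions, the vast majority of calls are never heard by anyone, which is the gap automated analysis is meant to close.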

And so the obvious next step in the evolution there is, if you can automate this process and make it actually good (again, see the previous demo), then you can learn a lot about what customers are actually saying for real. And so now the question would be: alright, that’s great. You told us there was something that previously was really hard to do, and now it’s really easy to do, or it seems like it’s easy to do. How does that all work? It seems hard. Well, it is hard. Audio is a really hard problem, and I’m just going to describe that a little bit to you.

So take five seconds and look at this image, and I won’t say anything. Ok. Does anybody remember what happened in that image? There’s a dog with a blue Frisbee. There’s a girl probably fifty feet in the background with, like, a bodyboard. There’s another bodyboard. And I don’t know why I keep going out here. But from the image perspective, you can glean a lot of information in a very small amount of time. And this makes labeling images really easy and inexpensive in a lot of ways. Trust me, it’s still expensive. But compared to audio and many other sources, it’s a lot easier. You can get this whole story just from that quick five seconds. But now what if you were asked to look at this hour-long lecture for five seconds and then tell me what happened? Right? You get five seconds. I’m not gonna play a biochemistry lecture for you. But, nevertheless, in five seconds with that, you’ll get five words, ten, fifteen words, whatever. There isn’t much you can do. Right? And so, hopefully, that illustrates a little bit about what’s happening with audio. It’s real time. It’s very hard for a person to parallel process.

You can recognize that in the cocktail-party problem, which we’ll all have later today when we’re all sitting around trying to talk to each other, many conversations going on, etcetera. You can only really track one, maybe one and a half. Part of the reason for that is humans are actually pretty good in real time in conversation. They can understand what other humans are doing, but we have specialized hardware for it. We have our brain and ears and everything tuned just for listening to other humans talk, and computers don’t. They see the image like what is on the right here. And that’s not the whole, like, sixty-minute lecture. That’s, like, a couple of seconds of it. So it’s a whole mess. Right? And if you were to try to represent that entire lecture that way, you, as a human, would have no idea what’s going on. You might be able to say whether a person was talking in this area or not. That might be a question you can answer, but, otherwise, you have no idea what they’re saying.

But if you run it through our ears and through our brain in real time, then we can understand it pretty well. But now how do you get a machine to actually do that? That’s the real trick. Right? And if you have a machine that can do it, then it’s probably very valuable because now you can understand humans at scale. And that’s the type of thing that we build. I’ll just point out a few of these problems again, like, in a call center space. Maybe you have specific product names or company names or industry terms and jargon or certain acronyms that you’re using if you’re a bank or whatever it is. There’s also how it’s said: maybe you’re speaking in a specific language, English, for instance. You could have a certain accent, dialect, etcetera. You could be upset or not. There could be background noise. You could be using a phone or some other device to actually talk to people, like calling in on a meeting or something. And, generally, when you’re having these conversations, they’re in a conversational style.

It’s a fast type of speaking, like I’m doing right now. Sorry about that. But, nevertheless, it’s very conversational. You’re not trying to purposefully slow down and enunciate so that the machine can understand you. So that’s a challenge you have to deal with. And then a lot of people might think of audio as not that big of a file, but it’s about a third the size of video. So there’s a lot of information packed into audio in order to make it sound good enough for you to understand. And there are different encodings and different compression techniques, and there could be several channels, like an agent talking on one channel and the customer that’s calling in on another, so multiple channels.
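As a rough illustration of why audio files add up, and of what "multiple channels" means in practice, here is a small Python sketch. It assumes uncompressed PCM audio, and the 8 kHz, 16-bit, two-channel settings are an assumption chosen to resemble a telephone recording, not figures from the talk. The function names are made up for illustration.

```python
def pcm_size_bytes(duration_s: int, sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Size of uncompressed PCM audio: samples per second x bytes per sample x channels x seconds."""
    return duration_s * sample_rate_hz * (bits_per_sample // 8) * channels


def split_channels(interleaved: list[int], channels: int) -> list[list[int]]:
    """Separate interleaved samples (ch0, ch1, ch0, ch1, ...) into one list per channel."""
    return [interleaved[c::channels] for c in range(channels)]


# One hour of assumed telephone-style audio: 8 kHz, 16-bit, agent and customer on separate channels.
one_hour = pcm_size_bytes(duration_s=3600, sample_rate_hz=8000, bits_per_sample=16, channels=2)
print(f"one hour of stereo call audio: {one_hour / 1_000_000:.1f} MB uncompressed")

# Toy interleaved frame data: small values stand in for the agent, large ones for the customer.
agent, customer = split_channels([1, 10, 2, 20, 3, 30], channels=2)
print("agent channel:", agent)
print("customer channel:", customer)
```

Keeping the agent and the customer on separate channels means each side can be transcribed on its own, without first having to work out who is speaking.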

And so, anyway, audio is very varied and very difficult. I’ll talk technically just for a second here about the way that you have to handle this. You need a lot of data that is labeled, but it’s not just a lot of it. The hour count matters, but it doesn’t matter as much as you might think. But it definitely matters. You need a lot in different areas: young, old, male, female, low noise, high noise, in the car or not, upset, not upset, very happy, etcetera. And you need this in multiple languages, multiple accents, etcetera, and you need that all labeled as a data plus truth pair, essentially. So here’s the audio and here’s the transcript that goes with that audio, and then the machine can learn by example. And you can kind of see that if you can read it here.
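One way to picture the "data plus truth pair" idea, and the point that coverage matters as well as raw hour count, is the following minimal Python sketch. Everything in it is hypothetical: the `LabeledUtterance` class, the file paths, the transcripts, and the condition tags are invented for illustration and do not describe Deepgram’s actual data format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LabeledUtterance:
    audio_path: str              # the data (hypothetical path)
    transcript: str              # the truth that goes with that audio
    duration_s: float
    conditions: tuple[str, ...]  # hypothetical tags: language, noise level, device, ...


def hours_by_condition(dataset: list[LabeledUtterance]) -> dict[str, float]:
    """Total labeled hours per condition tag, to spot under-represented areas."""
    totals: dict[str, float] = defaultdict(float)
    for utterance in dataset:
        for condition in utterance.conditions:
            totals[condition] += utterance.duration_s / 3600
    return dict(totals)


# Made-up examples; a real training set would hold many thousands of such pairs.
dataset = [
    LabeledUtterance("calls/0001.wav", "i would like to check my balance", 1800.0, ("en-US", "low_noise", "phone")),
    LabeledUtterance("calls/0002.wav", "can i get a large fries with that", 3600.0, ("en-US", "high_noise", "car")),
    LabeledUtterance("calls/0003.wav", "my card was declined this morning", 900.0, ("en-GB", "high_noise", "phone")),
]

coverage = hours_by_condition(dataset)
for condition, hours in sorted(coverage.items()):
    print(f"{condition:>10}: {hours:.2f} h")
```

A report like this makes gaps visible: a dataset can have plenty of total hours and still have almost nothing for a particular accent or noise condition.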

Audio comes in labeled, and that’s converted into training data. And then that makes a trained model, for which you use, like, convolutional neural networks, dense layers, recurrent neural networks, attention layers, or that type of thing. I think of it like a periodic table of chemical elements, but, instead, it’s just for deep learning. You have these different elements that care about spatial or temporal structure or that type of thing. But, nevertheless, mix those up, put them in the right order, expose them to a bunch of training data, and then you have a general model. If people in here have used APIs before, or you’ve used the keyboard on your phone, then, generally, what you’re using is a general model, which is a model that’s one size fits all, a jack-of-all-trades. Not necessarily a master of one, but it’s designed to hopefully do pretty ok with the general population.

But if you have a specific task, like you’re our customer, like Citibank, and you’re like, hey, I have bankers, and they’re talking about bank stuff, and we wanna make sure that they’re compliant in the things that they’re doing, then you can go through this bottom loop here, which says to take new data and label it to do transfer learning. So you have your original model that is already really good at English or other languages, and then you expose it to a specific domain and serve that in order to accomplish your task.
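The general-model-then-transfer-learning loop described above can be sketched with a deliberately tiny stand-in. This is not Deepgram’s training code: a two-parameter linear model plays the role of the speech network, the "general" and "domain" datasets are synthetic, and the step counts and learning rate are arbitrary assumptions. It only illustrates the idea of starting from pretrained weights, freezing part of the model, and adapting the rest on a small amount of domain data.

```python
def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the model y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(w: float, b: float, data: list[tuple[float, float]],
          steps: int, lr: float = 0.1, freeze_w: bool = False) -> tuple[float, float]:
    """Plain gradient descent on MSE; `freeze_w` keeps the pretrained weight fixed."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        if not freeze_w:
            w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "General" data: plenty of examples of the broad relationship y = 2x + 1 (synthetic).
general_data = [(i / 10, 2 * (i / 10) + 1) for i in range(21)]
# "Domain" data: only three examples, same slope but a shifted offset (synthetic).
domain_data = [(0.5, 2.5), (1.0, 3.5), (1.5, 4.5)]

# 1. Train the general model from scratch on lots of data.
w_general, b_general = train(0.0, 0.0, general_data, steps=2000)
general_loss_on_domain = mse(w_general, b_general, domain_data)

# 2. Transfer learning: start from the general weights, freeze w, adapt b with a small budget.
w_tuned, b_tuned = train(w_general, b_general, domain_data, steps=20, freeze_w=True)
finetuned_loss = mse(w_tuned, b_tuned, domain_data)

# 3. Baseline: the same small budget on domain data, but starting from nothing.
w_scratch, b_scratch = train(0.0, 0.0, domain_data, steps=20)
scratch_loss = mse(w_scratch, b_scratch, domain_data)

print(f"general model on domain data: {general_loss_on_domain:.5f}")
print(f"fine-tuned from general:      {finetuned_loss:.5f}")
print(f"trained from scratch:         {scratch_loss:.5f}")
```

In this toy setup, the fine-tuned model ends up with lower error on the domain data than both the unadapted general model and a model trained from scratch on the same small budget, which is the intuition behind adapting a general model to a bank’s or a restaurant’s vocabulary.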

So, anyway, if you wanna talk about the technical side of it later, I’m happy to discuss, but that’s how it all works under the hood. Now you can get rid of the old way, or keep doing it if you find value in it, but add the new way, which is doing a hundred percent analysis across all your data. Do it in a reliable way. Do it in a scalable way and with high accuracy. I think you guys just saw the demo from Cyrano, one of our customers. We’re pumped to have them, and we can discuss the use case there a little bit later. Again, we have a booth back there. So I won’t take too much time talking about them, but another company that maybe some in the audience have heard of is Valyant.

And Valyant does fast-food ordering. Well, it doesn’t have to be fast food. It could be many other things, but, typically, it’s fast food. So think of driving through, and you’re in your car or you’re on your mobile phone, or you’re at a kiosk trying to order food inside a mall or something like that. And you have very specific menu items, like Baconator or something like that. And you’d like your model to be very good at those things in this very noisy environment. How do you do that? Well, you just saw the magic. You have a general model, and then you transfer learn that model into their domain, and then it works really well. And another place that this works really well is in space.

So NASA is one of our customers. They do space-to-ground communication. We worked with the CTO and their team at the Johnson Space Center in Houston. Essentially, there’s always a link between the International Space Station and ground, and there are people listening all the time, like actual humans, three of them sitting down and transcribing everything that’s happening. And the question is, hey, can we reduce that number to, like, two people or one person or zero people or something like that? This is the problem that they’re working on, and it’s a big challenge. Their CTO says, hey, the problem’s right in our faces. We have all of seventy-five hundred pieces of jargon and acronyms, and it’s a scratchy radio signal, and it’s not a pleasant thing to try to transcribe. But, hey, if there were a machine that could listen to this and do a good job of it, then that would be very valuable to them. And so, anyway, they tried a lot of vendors.

And as you can maybe imagine, if you use a general model approach, which is what pretty much everybody else does, then it doesn’t work that well. But if you use this transfer-learning approach, then it works really well at handling background noise, jargon, multiple speakers, etcetera. And, yeah, happy to talk about these use cases later if you want to. But, yeah, moving on to act three here a little bit, which is just a comment on what’s happening in the world. We’re in the middle of the intelligence revolution, like it or not. There was the agricultural revolution, the industrial revolution, the information revolution. We’re in the intelligence revolution right now. Automation is going to happen. Thirty years from now we’re gonna look back and be like, oh, yeah, those were the beginning days. Just like if anybody in here was around when the Internet was first starting to form. You remember when you got your first email account, and you’re like, holy shit, this is crazy. You can talk to anybody anywhere.

And, anyway, we’re at that point in time right now. Another comment is that real time is finally real. A couple of years ago, it wasn’t, and now it actually is. That’s partially because the models are getting good enough to do things in real time, but it’s also partially because the computational techniques have become sophisticated enough without being as heavy a lift, plus NVIDIA has gotten good, essentially, at processing larger model sizes and that type of thing. So, essentially, a confluence of hardware plus software plus new techniques being implemented in software can make high-accuracy real time actually real. And with these techniques, if you’re in a general domain, you can get, like, ninety percent plus accuracy with just a general model.

Or if you need that kind of accuracy, but it’s kind of a niche domain, then you can go after a custom-trained model. And this stuff all works now, basically. So a lot of times people come to me and say, hey, is it possible to…? And in audio, the answer is almost always yes, unless it’s something that a human really can’t do. Like, can you build a lie detector? Well, I mean, kind of, yes, but only about as well as a human could. I mean, not quite that well, but close. Right? But think about all the other things that a human can do. They can jump into a conversation, tell you how many people are speaking, when they’re speaking, what words they’re saying, what topic they’re talking about, that type of thing.

These things are all possible, but it’s still kind of like when the railroad was built across the United States: there’s a whole bunch of other work that has to be done, and all that stuff is happening now. So, yeah, the people who win the west over the next couple of decades will be the ones that empower the developers to build this architecture, you know, the railroad builders and the towns that sprout up along those railroad lines. It’s going to be in compliance spaces, voice bots, call centers, meetings, podcasts. It’s gonna be all over the place. I mean, voice is the natural human communication mechanism.

I’m up here talking right now. Right? This is the natural communication mechanism, and previously it was just enabled by connectivity. You could call somebody. You could do that type of thing, but there was never any automation or intelligence behind it, and now that’s all changing. And, you know, our lives are gonna change massively because of it. I think the productivity of the world is gonna go up significantly, and the previous talks that we heard today were all hinting at that. Our lives are gonna change a lot. So, I don’t know, maybe people are tired of raising hands. But are there start-up founders in the room here? Ok. Cool. So if you’re into voice and you’re a start-up founder, you might wanna know about this.

So Deepgram has a start-up program giving away ten million dollars in speech-recognition credit. What you can do is apply and get up to a hundred thousand dollars for your start-up. The reason we do this is to help push forward the new products being built. I don’t know if people are quite aware of what happened with AWS, GCP, Azure, etcetera over the last ten, fifteen years. But they gave away a lot of credit, and a lot of tech companies benefited as they grew their company on that initial credit. And, of course, for Deepgram, the goal here is if you grow a whole bunch and become awesome, then, you know, great. You’re a customer of Deepgram, and we’re happy to help you. And another one.

So tomorrow evening, we have… how far away is it? A mile, but we have buses. We have party buses. Ok. So tomorrow evening, we’re gonna have a private screening of Dune. So if you haven’t seen Dune yet, now’s an opportunity to do it at a place called Suds n Cinema, which is a pretty dope theater, and we could all watch Dune together if you wanna come hang out and do that. We also have a booth. And we have a new member of our Deepgram team. We call him our VP of Intrigue. If you want to come meet him, come talk to us at the booth. I won’t say any more. But thank you, everybody, for listening to the talk, and I appreciate everybody coming out to hear it.

To learn more about real-time voice analysis, check out Cyrano.ai.

If you have any feedback about this post, or anything else around Deepgram, we'd love to hear from you. Please let us know in our GitHub discussions.
