The History of Automatic Speech Recognition

January 15, 2021 in Speech Trends

The most exciting time to be in the Automatic Speech Recognition (ASR) space is right now. Consumers use Siri and Alexa daily to ask questions, order products, play music, play games, and do simple tasks. This is the norm, and it started less than 15 years ago with Google Voice Search. On the enterprise side, we see voicebots, conversational AI, and speech analytics that can determine sentiment and emotion as well as identify the language being spoken.

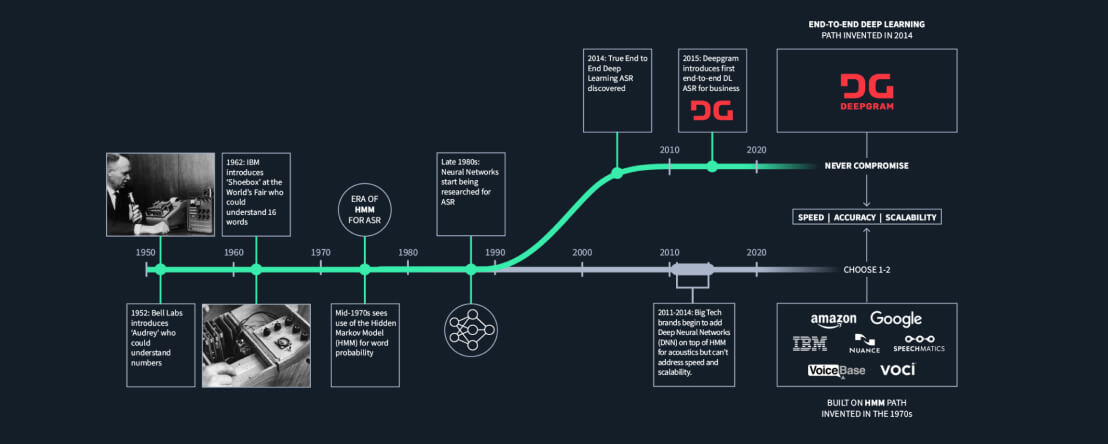

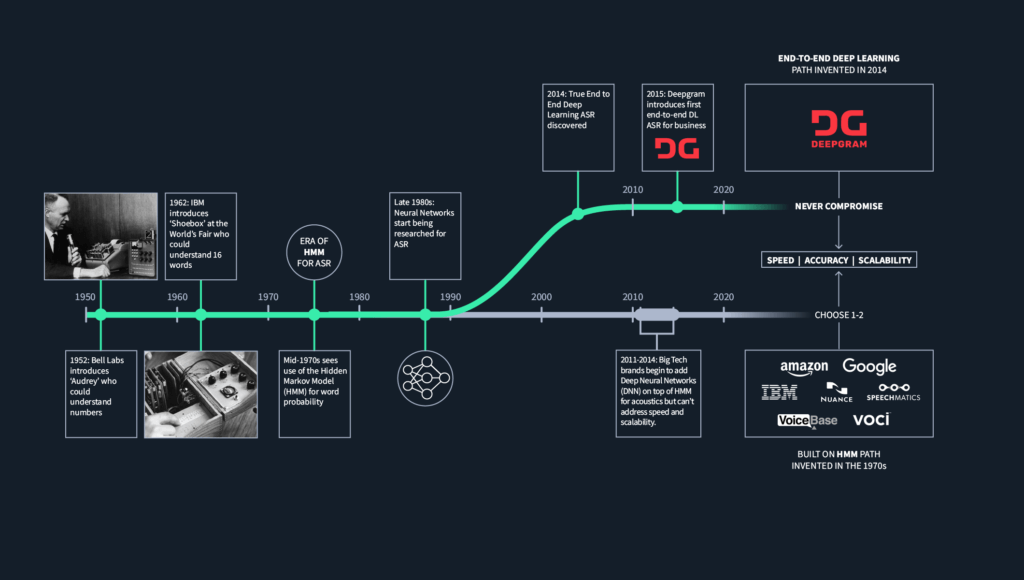

Early Years: Hidden Markov Models and Trigram Models

The history of Automatic Speech Recognition started in 1952 with Bell Labs and a program called Audrey, which could transcribe simple numbers. The next breakthrough did not occur until the mid-1970s, when researchers started using Hidden Markov Models (HMMs). HMMs use probability functions to determine the correct words to transcribe. These ASR speech models take snippets of audio to determine the smallest unit of sound in a word, called a phoneme. The phonemes are then fed into another program that uses the HMM to guess the right word using a most-common-word probability function. These serial processing models are refined by adding noise reduction up front and beam search language models on the back end to create understandable text and sentences. Beam search is a time-dependent probability function that looks at the transcribed words before and after the target word to find the best fit for the target word. This whole serial process is called the "trigram" model, and 80% of the ASR technology currently in use is a refined version of this 1970s model.
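To make the last two steps more concrete, here is a minimal sketch of how a trigram language model and beam search can work together to pick words. Everything in it is an illustrative assumption rather than a description of any production system: the tiny `CORPUS`, the per-word acoustic scores in `CANDIDATES`, and the function names `train_trigrams`, `trigram_logprob`, and `beam_search` are all hypothetical. It also simplifies the description above by scoring each word only against the two words before it.

```python
import math
from collections import defaultdict

# Hypothetical toy training text used to estimate trigram counts.
CORPUS = [
    "please recognize speech today",
    "we recognize speech with models",
    "they wreck a nice beach today",
]

# Hypothetical acoustic output: for each position, candidate words with
# log-probabilities. Note that "beach" sounds slightly more likely than "speech".
CANDIDATES = [
    {"we": math.log(0.9), "they": math.log(0.1)},
    {"recognize": math.log(0.6), "wreck": math.log(0.4)},
    {"speech": math.log(0.45), "beach": math.log(0.55)},
]


def train_trigrams(sentences):
    """Count how often each word follows each pair of preceding words."""
    counts = defaultdict(lambda: defaultdict(int))
    vocab = set()
    for sentence in sentences:
        words = ["<s>", "<s>"] + sentence.split()  # pad with start tokens
        vocab.update(words)
        for w1, w2, w3 in zip(words, words[1:], words[2:]):
            counts[(w1, w2)][w3] += 1
    return counts, vocab


def trigram_logprob(counts, vocab, w1, w2, w3):
    """Add-one smoothed log P(w3 | w1, w2)."""
    context = counts.get((w1, w2), {})
    total = sum(context.values())
    return math.log((context.get(w3, 0) + 1) / (total + len(vocab)))


def beam_search(candidates, counts, vocab, beam_width=2):
    """Keep the `beam_width` best partial transcripts at every position."""
    beams = [(0.0, ["<s>", "<s>"])]
    for options in candidates:
        expanded = []
        for score, words in beams:
            for word, acoustic in options.items():
                lm = trigram_logprob(counts, vocab, words[-2], words[-1], word)
                expanded.append((score + acoustic + lm, words + [word]))
        expanded.sort(key=lambda item: item[0], reverse=True)
        beams = expanded[:beam_width]
    best_score, best_words = beams[0]
    return best_words[2:], best_score  # drop the start tokens


if __name__ == "__main__":
    counts, vocab = train_trigrams(CORPUS)
    words, score = beam_search(CANDIDATES, counts, vocab)
    print(" ".join(words), round(score, 3))
```

With this toy data, the sketch settles on "we recognize speech" even though the acoustic scores alone slightly favor "beach", because the trigram counts make "speech" the more probable word after "we recognize".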

New Generation of ASR: Neural Networks

The next big breakthrough came in the late 1980s with the addition of neural networks. This was also an inflection point for ASR. Most researchers and companies used these neural networks to improve their existing trigram models, with better audio phoneme differentiation up front or better text and sentence creation on the back end. This trigram model works very well for consumer devices like Alexa and Siri that only have to respond to a small set of voice commands. However, it is not as effective for enterprise use cases like meetings, phone calls, and automated voicebots. The refined trigram models require huge amounts of processing power to provide accurate transcription at speed, so businesses have to trade speed for accuracy or accuracy for cost.

New Revolution in ASR: Deep Learning

Other researchers believed that neural networks were the key to a new type of ASR. With the advent of big data, faster computers, and graphics processing unit (GPU) computing, a new ASR method was developed: end-to-end deep learning ASR. This new method could "learn" and be "trained" to become more accurate as more data is fed into the neural networks. Developers no longer need to re-code each part of the trigram serial model to add new languages, parse accents, reduce noise, or add new words. The other big advantage of end-to-end deep learning ASR is that you can have accuracy, speed, and scalability without driving up costs.

History of Speech Recognition and Hidden Markov Models

This is how Deepgram was born: out of research that, rather than refining a 50-year-old ASR model, started from deep learning neural networks. Check out the entire history of ASR in the image above. Contact us to learn how you can decrease word error rate systematically without compromising speed, scale, or cost, or sign up for a free API key to get started today.
